December 31, 2021

New Paper

New paper out: "Accelerated primal-dual gradient method for smooth and convex-concave saddle-point problems with bilinear coupling" - joint work with Dmitry Kovalev and Alexander Gasnikov.

Abstract: In this paper we study a convex-concave saddle-point problem min_x max_y f(x) + y^T A x − g(y), where f(x) and g(y) are smooth and convex functions. We propose an Accelerated Primal-Dual Gradient Method for solving this problem which (i) achieves an optimal linear convergence rate in the strongly-convex-strongly-concave regime matching the lower complexity bound (Zhang et al., 2021) and (ii) achieves an accelerated linear convergence rate in the case when only one of the functions f(x) and g(y) is strongly convex or even none of them are. Finally, we obtain a linearly-convergent algorithm for the general smooth and convex-concave saddle point problem min_x max_y F(x,y) without requirement of strong convexity or strong concavity.
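To make the problem class concrete, here is a minimal sketch of the plain (non-accelerated) simultaneous gradient descent-ascent baseline on a scalar instance of min_x max_y f(x) + y^T A x − g(y). This is not the Accelerated Primal-Dual Gradient Method from the paper; the choices f(x) = (mu/2) x^2, g(y) = (mu/2) y^2, the scalar coupling `a`, the step size, and the function name `gda` are all assumptions made purely for illustration.

```python
def gda(mu, a, step, iters, x=1.0, y=1.0):
    """Simultaneous gradient descent-ascent on the toy saddle-point problem
    min_x max_y (mu/2) x^2 + a*x*y - (mu/2) y^2, whose saddle point is (0, 0).
    Illustrative baseline only, not the accelerated method of the paper."""
    for _ in range(iters):
        gx = mu * x + a * y   # partial derivative of the objective in x
        gy = a * x - mu * y   # partial derivative of the objective in y
        # descend in x, ascend in y, using the same (old) iterate for both
        x, y = x - step * gx, y + step * gy
    return x, y


x_final, y_final = gda(mu=1.0, a=2.0, step=0.1, iters=200)
print(x_final, y_final)
```

In this strongly-convex-strongly-concave toy case each step shrinks the distance to the saddle point by a constant factor, which is the "linear convergence" the abstract refers to; the paper's contribution concerns how fast that factor can be made.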

December 24, 2021

New Paper

New paper out: "Faster rates for compressed federated learning with client-variance reduction" - joint work with Haoyu Zhao, Konstantin Burlachenko and Zhize Li.

December 7, 2021

New Paper

New paper out: "FL_PyTorch: optimization research simulator for federated learning" - joint work with Konstantin Burlachenko and Samuel Horváth.

Abstract: Federated Learning (FL) has emerged as a promising technique for edge devices to collaboratively learn a shared machine learning model while keeping training data locally on the device, thereby removing the need to store and access the full data in the cloud. However, FL is difficult to implement, test and deploy in practice considering heterogeneity in common edge device settings, making it fundamentally hard for researchers to efficiently prototype and test their optimization algorithms. In this work, our aim is to alleviate this problem by introducing FL_PyTorch: a suite of open-source software written in Python that builds on top of one of the most popular research Deep Learning (DL) frameworks, PyTorch. We built FL_PyTorch as a research simulator for FL to enable fast development, prototyping and experimenting with new and existing FL optimization algorithms. Our system supports abstractions that provide researchers with a sufficient level of flexibility to experiment with existing and novel approaches to advance the state-of-the-art. Furthermore, FL_PyTorch is a simple-to-use console system that allows running several clients simultaneously using local CPUs or GPU(s), and even remote compute devices, without the need for any distributed implementation provided by the user. FL_PyTorch also offers a Graphical User Interface. For new methods, researchers only provide the centralized implementation of their algorithm. To showcase the possibilities and usefulness of our system, we experiment with several well-known state-of-the-art FL algorithms and a few of the most common FL datasets.

The paper is published in the Proceedings of the 2nd ACM International Workshop on Distributed Machine Learning.

December 7, 2021

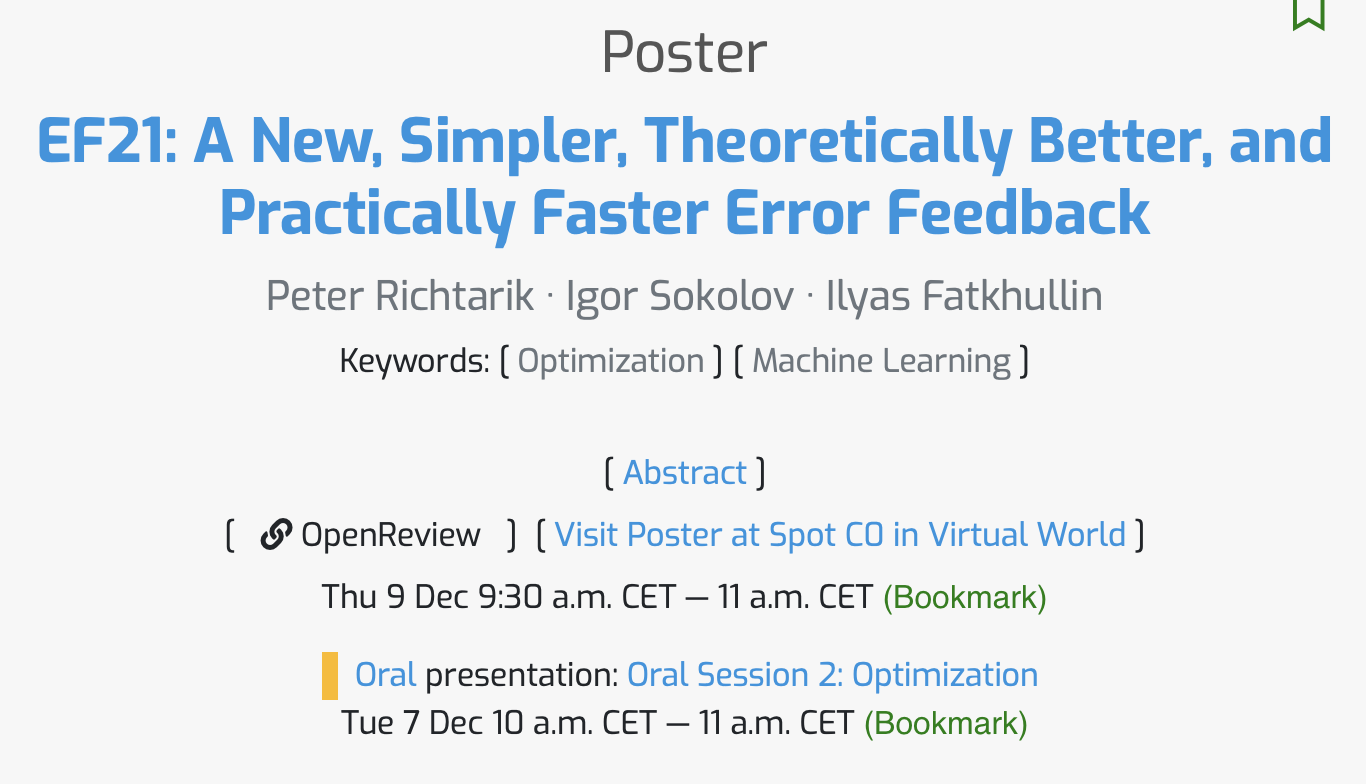

Oral Talk at NeurIPS 2021

Today I gave an oral talk at NeurIPS about the EF21 method. Come to our poster on Thursday! A longer version of the talk is on YouTube.

November 24, 2021

KAUST-GSAI Workshop

Today and tomorrow I am attending (and giving a talk at) the KAUST-GSAI Joint Workshop on Advances in AI.

November 22, 2021

New Paper

New paper out: "FLIX: A Simple and Communication-Efficient Alternative to Local Methods in Federated Learning" - joint work with Elnur Gasanov, Ahmed Khaled and Samuel Horváth.

Abstract: Federated Learning (FL) is an increasingly popular machine learning paradigm in which multiple nodes try to collaboratively learn under privacy, communication and multiple heterogeneity constraints. A persistent problem in federated learning is that it is not clear what the optimization objective should be: the standard average risk minimization of supervised learning is inadequate in handling several major constraints specific to federated learning, such as communication adaptivity and personalization control. We identify several key desiderata in frameworks for federated learning and introduce a new framework, FLIX, that takes into account the unique challenges brought by federated learning. FLIX has a standard finite-sum form, which enables practitioners to tap into the immense wealth of existing (potentially non-local) methods for distributed optimization. Through a smart initialization that does not require any communication, FLIX does not require the use of local steps but is still provably capable of performing dissimilarity regularization on par with local methods. We give several algorithms for solving the FLIX formulation efficiently under communication constraints. Finally, we corroborate our theoretical results with extensive experimentation.

November 17, 2021

Samuel, Dmitry and Grigory won the 2021 CEMSE Research Excellence Award!

Today I am very proud and happy! Three of my students won the CEMSE Research Excellence Award at KAUST: Samuel Horváth (Statistics PhD student), Dmitry Kovalev (Computer Science PhD student) and Grigory Malinovsky (Applied Math and Computing Sciences MS student). The Statistics award is also known as the "Al-Kindi Research Excellence Award".

The award comes with a 1,000 USD cash prize for each. Congratulations to all of you, well deserved!

November 10, 2021

Talk at the SMAP Colloquium at the University of Portsmouth, United Kingdom

Today I gave a 1hr research talk on the EF21 method at the SMAP Colloquium, University of Portsmouth, UK.

November 2, 2021

New Paper

New paper out: "Basis Matters: Better Communication-Efficient Second Order Methods for Federated Learning" - joint work with Xun Qian, Rustem Islamov and Mher Safaryan.

Abstract: Recent advances in distributed optimization have shown that Newton-type methods with proper communication compression mechanisms can guarantee fast local rates and low communication cost compared to first order methods. We discover that the communication cost of these methods can be further reduced, sometimes dramatically so, with a surprisingly simple trick: Basis Learn (BL). The idea is to transform the usual representation of the local Hessians via a change of basis in the space of matrices and apply compression tools to the new representation. To demonstrate the potential of using custom bases, we design a new Newton-type method (BL1), which reduces communication cost via both the BL technique and a bidirectional compression mechanism. Furthermore, we present two alternative extensions (BL2 and BL3) to partial participation to accommodate federated learning applications. We prove local linear and superlinear rates independent of the condition number. Finally, we support our claims with numerical experiments by comparing several first and second order methods.

November 1, 2021

Talk at the CS Graduate Seminar at KAUST

Today I am giving a talk in the CS Graduate Seminar at KAUST.

October 25, 2021

Talk at KInIT

Today at 15:30 I am giving a research talk at the Kempelen Institute of Intelligent Technologies (KInIT), Slovakia.

October 22, 2021

Talk at "Matfyz"

Today at 15:30 I am giving a talk in the machine learning seminar at "Matfyz", Comenius University, Slovakia. I will talk about the paper "EF21: A new, simpler, theoretically better, and practically faster error feedback" which was recently accepted to NeurIPS 2021 as an oral paper (less than 1% acceptance rate from more than 9000 paper submissions). The paper is joint work with Igor Sokolov and Ilyas Fatkhullin.

With an extended set of coauthors, we have recently written a follow up paper with many major extensions of the EF21 method; you may wish to look at this as well: "EF21 with Bells & Whistles: Practical Algorithmic Extensions of Modern Error Feedback". This second paper is joint work with Ilyas Fatkhullin, Igor Sokolov, Eduard Gorbunov, and Zhize Li.

October 20, 2021

Paper Accepted to Frontiers in Signal Processing

The paper "Distributed proximal splitting algorithms with rates and acceleration", joint work with Laurent Condat and Grigory Malinovsky, was accepted to Frontiers in Signal Processing, section Signal Processing for Communications. The paper is a part of a special issue ("research topic" in the language of Frontiers) dedicated to "Distributed Signal Processing and Machine Learning for Communication Networks".

October 15, 2021

2 Students Received the 2021 NeurIPS Outstanding Reviewer Award

Congratulations to Eduard Gorbunov and Konstantin Mishchenko, who received the 2021 NeurIPS Outstanding Reviewer Award, given to the top 8% of reviewers, as judged by the conference chairs!

October 9, 2021

New Paper

New paper out: "Distributed Methods with Compressed Communication for Solving Variational Inequalities, with Theoretical Guarantees" - joint work with Aleksandr Beznosikov, Michael Diskin, Max Ryabinin and Alexander Gasnikov.

Abstract: Variational inequalities in general and saddle point problems in particular are increasingly relevant in machine learning applications, including adversarial learning, GANs, transport and robust optimization. With increasing data and problem sizes necessary to train high performing models across these and other applications, it is necessary to rely on parallel and distributed computing. However, in distributed training, communication among the compute nodes is a key bottleneck during training, and this problem is exacerbated for high dimensional and over-parameterized models. Due to these considerations, it is important to equip existing methods with strategies that reduce the volume of transmitted information during training while obtaining a model of comparable quality. In this paper, we present the first theoretically grounded distributed methods for solving variational inequalities and saddle point problems using compressed communication: MASHA1 and MASHA2. Our theory and methods allow for the use of both unbiased (such as RandK; MASHA1) and contractive (such as TopK; MASHA2) compressors. We empirically validate our conclusions using two experimental setups: a standard bilinear min-max problem, and large-scale distributed adversarial training of transformers.
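For readers unfamiliar with the two compressor families named in the abstract, the following is a minimal sketch of the standard TopK (contractive) and RandK (unbiased) sparsifiers on plain Python lists. It illustrates the compressors only, not MASHA1 or MASHA2; the function names `top_k` and `rand_k` and the example vector are assumptions for illustration.

```python
import random


def top_k(x, k):
    """Contractive TopK: keep the k entries of largest magnitude, zero the rest."""
    keep = set(sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)[:k])
    return [x[i] if i in keep else 0.0 for i in range(len(x))]


def rand_k(x, k, rng):
    """Unbiased RandK: keep k uniformly random entries, scaled by d/k
    so that the expectation of the output equals x."""
    d = len(x)
    keep = set(rng.sample(range(d), k))
    return [x[i] * d / k if i in keep else 0.0 for i in range(d)]


x = [3.0, -1.0, 0.5, -4.0]
print(top_k(x, 2))                      # [3.0, 0.0, 0.0, -4.0]
print(rand_k(x, 2, random.Random(0)))   # two random entries, each doubled
```

TopK is deterministic and biased but never increases the norm of the vector; RandK is random and unbiased but has higher variance. That difference is why the two families typically need different algorithmic treatment.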

October 8, 2021

New Paper

New paper out: "Permutation Compressors for Provably Faster Distributed Nonconvex Optimization" - joint work with Rafał Szlendak and Alexander Tyurin.

Abstract: We study the MARINA method of Gorbunov et al (ICML, 2021) -- the current state-of-the-art distributed non-convex optimization method in terms of theoretical communication complexity. Theoretical superiority of this method can be largely attributed to two sources: the use of a carefully engineered biased stochastic gradient estimator, which leads to a reduction in the number of communication rounds, and the reliance on independent stochastic communication compression operators, which leads to a reduction in the number of transmitted bits within each communication round. In this paper we i) extend the theory of MARINA to support a much wider class of potentially correlated compressors, extending the reach of the method beyond the classical independent compressors setting, ii) show that a new quantity, for which we coin the name Hessian variance, allows us to significantly refine the original analysis of MARINA without any additional assumptions, and iii) identify a special class of correlated compressors based on the idea of random permutations, for which we coin the term PermK, the use of which leads to $O(\sqrt{n})$ (resp. $O(1 + d/\sqrt{n})$) improvement in the theoretical communication complexity of MARINA in the low Hessian variance regime when $d\geq n$ (resp. $d \leq n$), where $n$ is the number of workers and $d$ is the number of parameters describing the model we are learning. We corroborate our theoretical results with carefully engineered synthetic experiments with minimizing the average of nonconvex quadratics, and on autoencoder training with the MNIST dataset.
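The idea of a permutation-based correlated compressor can be sketched as follows for the case where $n$ divides $d$: one shared random permutation splits the $d$ coordinates into $n$ disjoint blocks, and worker $i$ transmits only its own block, scaled by $n$. This is a simplified sketch in the spirit of PermK under that divisibility assumption, not the MARINA method itself; the helper names `perm_k` and `average` are hypothetical.

```python
import random


def perm_k(vectors, rng):
    """Apply a PermK-style compressor to n vectors of dimension d (n divides d).

    A single shared permutation assigns each coordinate to exactly one worker;
    worker i keeps only its coordinates, multiplied by n.
    """
    n, d = len(vectors), len(vectors[0])
    assert d % n == 0, "this sketch assumes n divides d"
    q = d // n
    perm = list(range(d))
    rng.shuffle(perm)  # the permutation is shared by all workers
    compressed = []
    for i, x in enumerate(vectors):
        c = [0.0] * d
        for j in perm[i * q:(i + 1) * q]:  # worker i's block of coordinates
            c[j] = n * x[j]
        compressed.append(c)
    return compressed


def average(vectors):
    """Coordinate-wise average, as the server would compute it."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


x = [1.0, 2.0, 3.0, 4.0]
sent = perm_k([x, x], random.Random(0))
print(sent)           # each worker sends d/n = 2 nonzero entries
print(average(sent))  # [1.0, 2.0, 3.0, 4.0]
```

When all workers hold the same vector, the server's average recovers it exactly even though each worker sends only $d/n$ entries. Independent compressors cannot do this, which gives some intuition for why correlation helps when the local functions are similar.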

October 7, 2021

New Paper

New paper out: "EF21 with Bells & Whistles: Practical Algorithmic Extensions of Modern Error Feedback" - joint work with Ilyas Fatkhullin, Igor Sokolov, Eduard Gorbunov and Zhize Li.

Abstract: First proposed by Seide et al (2014) as a heuristic, error feedback (EF) is a very popular mechanism for enforcing convergence of distributed gradient-based optimization methods enhanced with communication compression strategies based on the application of contractive compression operators. However, existing theory of EF relies on very strong assumptions (e.g., bounded gradients), and provides pessimistic convergence rates (e.g., while the best known rate for EF in the smooth nonconvex regime, and when full gradients are compressed, is O(1/T^{2/3}), the rate of gradient descent in the same regime is O(1/T)). Recently, Richtárik et al (2021) proposed a new error feedback mechanism, EF21, based on the construction of a Markov compressor induced by a contractive compressor. EF21 removes the aforementioned theoretical deficiencies of EF and at the same time works better in practice. In this work we propose six practical extensions of EF21: partial participation, stochastic approximation, variance reduction, proximal setting, momentum and bidirectional compression. Our extensions are supported by strong convergence theory in the smooth nonconvex and also Polyak-Łojasiewicz regimes. Several of these techniques were never analyzed in conjunction with EF before, and in cases where they were (e.g., bidirectional compression), our rates are vastly superior.

October 5, 2021

2020 COAP Best Paper Award

We have just received this email:

Your paper "Momentum and stochastic momentum for stochastic gradient, Newton, proximal point and subspace descent methods" published in Computational Optimization and Applications was voted by the editorial board as the best paper appearing in the journal in 2020. There were 93 papers in the 2020 competition. Congratulations!

The paper is joint work with Nicolas Loizou.

October 4, 2021

Konstantin Mishchenko Defended his PhD Thesis

Konstantin Mishchenko defended his PhD thesis "On Seven Fundamental Optimization Challenges in Machine Learning" today.

Having started in Fall 2017 (I joined KAUST in March of the same year), Konstantin is my second PhD student to graduate from KAUST. Konstantin has done some absolutely remarkable research, described by the committee (Suvrit Sra, Wotao Yin, Lawrence Carin, Bernard Ghanem and myself) in the following way: "The committee commends Konstantin Mishchenko on his outstanding achievements, including research creativity, depth of technical/mathematical results, volume of published work, service to the community, and a particularly lucid presentation and defense of his thesis".

Konstantin wrote more than 20 papers and his works attracted more than 500 citations during his PhD. Konstantin's next destination is a postdoctoral fellowship position with Alexandre d'Aspremont and Francis Bach at INRIA. Congratulations, Konstantin!

September 29, 2021

Papers Accepted to NeurIPS 2021

We've had several papers accepted to the 35th Annual Conference on Neural Information Processing Systems (NeurIPS 2021), which will be run virtually during December 6-14, 2021. Here they are:

1) "EF21: A New, Simpler, Theoretically Better, and Practically Faster Error Feedback" [arXiv] - joint work with Igor Sokolov and Ilyas Fatkhullin.

This paper was accepted as an ORAL PAPER (less than 1% of all submissions).

2) "CANITA: Faster Rates for Distributed Convex Optimization with Communication Compression" [arXiv] - joint work with Zhize Li.

3) "Smoothness Matrices Beat Smoothness Constants: Better Communication Compression Techniques for Distributed Optimization" [arXiv] - joint work with Mher Safaryan and Filip Hanzely.

4) "Error Compensated Distributed SGD can be Accelerated" [arXiv] - joint work with Xun Qian and Tong Zhang.

5) "Lower Bounds and Optimal Algorithms for Smooth and Strongly Convex Decentralized Optimization Over Time-Varying Networks" [arXiv] - joint work with Dmitry Kovalev, Elnur Gasanov and Alexander Gasnikov.

6) "FjORD: Fair and Accurate Federated Learning Under Heterogeneous Targets with Ordered Dropout" [arXiv] - the work of Samuel Horváth, Stefanos Laskaridis, Mario Almeida, Ilias Leontiadis, Stylianos I. Venieris and Nicholas D. Lane.

7) "Moshpit SGD: Communication-Efficient Decentralized Training on Heterogeneous Unreliable Devices" [arXiv] - the work of Max Ryabinin, Eduard Gorbunov, Vsevolod Plokhotnyuk and Gennady Pekhimenko.

September 23, 2021

New Paper

New paper out: "Error Compensated Loopless SVRG, Quartz, and SDCA for Distributed Optimization" - joint work with Xun Qian, Hanze Dong, and Tong Zhang.

Abstract: The communication of gradients is a key bottleneck in distributed training of large scale machine learning models. In order to reduce the communication cost, gradient compression (e.g., sparsification and quantization) and error compensation techniques are often used. In this paper, we propose and study three new efficient methods in this space: error compensated loopless SVRG method (EC-LSVRG), error compensated Quartz (EC-Quartz), and error compensated SDCA (EC-SDCA). Our method is capable of working with any contraction compressor (e.g., TopK compressor), and we perform analysis for convex optimization problems in the composite case and smooth case for EC-LSVRG. We prove linear convergence rates for both cases and show that in the smooth case the rate has a better dependence on the parameter associated with the contraction compressor. Further, we show that in the smooth case, and under some certain conditions, error compensated loopless SVRG has the same convergence rate as the vanilla loopless SVRG method. Then we show that the convergence rates of EC-Quartz and EC-SDCA in the composite case are as good as EC-LSVRG in the smooth case. Finally, numerical experiments are presented to illustrate the efficiency of our methods.

September 11, 2021

New Paper

New paper out: "Doubly Adaptive Scaled Algorithm for Machine Learning Using Second-Order Information" - joint work with Majid Jahani, Sergey Rusakov, Zheng Shi, Michael W. Mahoney, and Martin Takáč.

Abstract: We present a novel adaptive optimization algorithm for large-scale machine learning problems. Equipped with a low-cost estimate of local curvature and Lipschitz smoothness, our method dynamically adapts the search direction and step-size. The search direction contains gradient information preconditioned by a well-scaled diagonal preconditioning matrix that captures the local curvature information. Our methodology does not require the tedious task of learning rate tuning, as the learning rate is updated automatically without adding an extra hyperparameter. We provide convergence guarantees on a comprehensive collection of optimization problems, including convex, strongly convex, and nonconvex problems, in both deterministic and stochastic regimes. We also conduct an extensive empirical evaluation on standard machine learning problems, justifying our algorithm's versatility and demonstrating its strong performance compared to other state-of-the-art first-order and second-order methods.

August 29, 2021

Fall 2021 Semester Started

The Fall semester has started at KAUST today; I am teaching CS 331: Stochastic Gradient Descent Methods.

Brief course blurb: Stochastic gradient descent (SGD) in one or another of its many variants is the workhorse method for training modern supervised machine learning models. However, the world of SGD methods is vast and expanding, which makes it hard for practitioners and even experts to understand its landscape and inhabitants. This course is a mathematically rigorous and comprehensive introduction to the field, and is based on the latest results and insights. The course develops a convergence and complexity theory for serial, parallel, and distributed variants of SGD, in the strongly convex, convex and nonconvex setup, with randomness coming from sources such as subsampling and compression. Additional topics such as acceleration via Nesterov momentum or curvature information will be covered as well. A substantial part of the course offers a unified analysis of a large family of variants of SGD which have so far required different intuitions, convergence analyses, have different applications, and which have been developed separately in various communities. This framework includes methods with and without the following tricks, and their combinations: variance reduction, data sampling, coordinate sampling, arbitrary sampling, importance sampling, mini-batching, quantization, sketching, dithering and sparsification.

August 11, 2021

New Paper

New paper out: "FedPAGE: A Fast Local Stochastic Gradient Method for Communication-Efficient Federated Learning" - joint work with Haoyu Zhao and Zhize Li.

Abstract: Federated Averaging (FedAvg, also known as Local-SGD) [McMahan et al., 2017] is a classical federated learning algorithm in which clients run multiple local SGD steps before communicating their update to an orchestrating server. We propose a new federated learning algorithm, FedPAGE, able to further reduce the communication complexity by utilizing the recent optimal PAGE method [Li et al., 2021] instead of plain SGD in FedAvg. We show that FedPAGE uses much fewer communication rounds than previous local methods for both federated convex and nonconvex optimization. Concretely, 1) in the convex setting, the number of communication rounds of FedPAGE is $O(\frac{N^{3/4}}{S\epsilon})$, improving the best-known result $O(\frac{N}{S\epsilon})$ of SCAFFOLD [Karimireddy et al., 2020] by a factor of $N^{1/4}$, where $N$ is the total number of clients (usually very large in federated learning), $S$ is the sampled subset of clients in each communication round, and $\epsilon$ is the target error; 2) in the nonconvex setting, the number of communication rounds of FedPAGE is $O(\frac{\sqrt{N}+S}{S\epsilon^2})$, improving the best-known result $O(\frac{N^{2/3}}{S^{2/3}\epsilon^2})$ of SCAFFOLD by a factor of $N^{1/6}S^{1/3}$ if the sampled clients $S\leq \sqrt{N}$. Note that in both settings, the communication cost for each round is the same for both FedPAGE and SCAFFOLD. As a result, FedPAGE achieves new state-of-the-art results in terms of communication complexity for both federated convex and nonconvex optimization.
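As a quick check of where the two improvement factors quoted in the abstract come from, one can divide the SCAFFOLD bounds by the FedPAGE bounds, ignoring the constants hidden in the $O(\cdot)$ notation:

$$\frac{N/(S\epsilon)}{N^{3/4}/(S\epsilon)} = N^{1/4}, \qquad \frac{N^{2/3}/(S^{2/3}\epsilon^2)}{(\sqrt{N}+S)/(S\epsilon^2)} = \frac{N^{2/3}S^{1/3}}{\sqrt{N}+S} \;\geq\; \frac{N^{2/3}S^{1/3}}{2\sqrt{N}} = \tfrac{1}{2}\,N^{1/6}S^{1/3} \quad \text{when } S\leq\sqrt{N}.$$

The condition $S\leq\sqrt{N}$ is what allows $\sqrt{N}+S$ to be bounded by $2\sqrt{N}$ in the second ratio.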

July 20, 2021

New Paper

New paper out: "CANITA: Faster Rates for Distributed Convex Optimization with Communication Compression" - joint work with Zhize Li.

In this work we develop and analyze the first distributed gradient method capable in the convex regime of benefiting from communication compression and acceleration/momentum at the same time. The strongly convex regime was first handled in the ADIANA paper (ICML 2020), and the nonconvex regime in the MARINA paper (ICML 2021).

July 20, 2021

Talk at SIAM Conference on Optimization

Today I gave a talk in the "Recent Advancements in Optimization Methods for Machine Learning - Part I of III" minisymposium at the SIAM Conference on Optimization. The conference was originally supposed to take place in Hong Kong in 2020, but due to the Covid-19 situation, this was not to be. Instead, the event is happening this year, and virtually. I was on the organizing committee for the conference, jointly responsible for inviting plenary and tutorial speakers.

July 12, 2021

Optimization Without Borders Conference (Nesterov 65)

Today I am giving a talk at the "Optimization Without Borders" conference, organized in the honor of Yurii Nesterov's 65th Birthday. This is a hybrid event, with online and offline participants. The offline part takes place at the Sirius University in Sochi, Russia.

Other speakers at the event (in order of giving talks at the event): Gasnikov, Nesterov, myself, Spokoiny, Mordukhovich, Bolte, Belomestny, Srebro, Zaitseva, Protasov, Shikhman, d'Aspremont, Polyak, Taylor, Stich, Teboulle, Lasserre, Nemirovski, Vorobiev, Yanitsky, Bakhurin, Dudorov, Molokov, Gornov, Rogozin, Hildebrand, Dvurechensky, Moulines, Juditsky, Sidford, Tupitsa, Kamzolov, and Anikin.

July 5, 2021

New Postdoc: Alexander Tyurin

Alexander Tyurin has joined my Optimization and Machine Learning lab as a postdoc. Welcome!!!

Alexander obtained his PhD from the Higher School of Economics (HSE) in December 2020, under the supervision of Alexander Gasnikov, with the thesis "Development of a method for solving structural optimization problems". His 15 research papers can be found on Google Scholar. He has a master's degree in CS from HSE (2017), with a GPA of 9.84 / 10, and a BS degree in Computational Mathematics and Cybernetics from Lomonosov Moscow State University, with a GPA of 4.97 / 5.

During his studies, and for a short period of time after his PhD, Alexander worked as a research and development engineer in the Yandex self-driving cars team, where he developed real-time algorithms for dynamic and static object detection in the perception team, using lidar (3D point clouds) and camera (images) sensors. His primary responsibilities there ranged from the creation of datasets, through research (Python, SQL, MapReduce), to the implementation of the proposed algorithms (C++). Prior to this, he was a Research Engineer at VisionLabs in Moscow where he developed a face recognition algorithm that achieved a top 2 result in the FRVT NIST international competition.

July 4, 2021

Two New Interns

Two new people joined my team as Summer research interns:

Rafał Szlendak joined as an undergraduate intern. Rafał is studying towards a BSc degree in Mathematics and Statistics at the University of Warwick, United Kingdom. He was involved in a research project entitled "Properties and characterisations of sequences generated by weighted context-free grammars with one terminal symbol". Rafał's achievements include:

- Ranked #1 in the Mathematics and Statistics Programme at Warwick, 2020

- Finalist, Polish National Mathematical Olympiad, 2017 and 2018

- Member of MATEX: an experimental mathematics programme for gifted students. This high school was ranked the top high school in Poland in the 2019 Perspektywy ranking.

Muhammad Harun Ali Khan joined as an undergraduate intern. Harun is a US citizen of Pakistani ancestry, and is studying towards a BSc degree in Mathematics at Imperial College London. He has interests in number theory, artificial intelligence and doing mathematics via the Lean proof assistant. Harun is the Head of the Imperial College Mathematics Competition problem selection committee. Harun has been active in various mathematics competitions at high school and university level. Some of his most notable recognitions and awards include:

- 2nd Prize, International Mathematics Competition for University Students, 2020

- Imperial College UROP Prize (for formalizing Fibonacci Squares in Lean)

- Imperial College Mathematics Competition, First Place in First Round

- Bronze Medal, International Mathematical Olympiad, United Kingdom, 2019

- Bronze Medal, Asian Pacific Mathematics Olympiad, 2019

- Honorable Mention, International Mathematical Olympiad, Romania, 2018

- Honorable Mention, International Mathematical Olympiad, Brazil, 2017

June 9, 2021

New Paper

New paper out: "EF21: A New, Simpler, Theoretically Better, and Practically Faster Error Feedback" - joint work with Igor Sokolov and Ilyas Fatkhullin.

Abstract: Error feedback (EF), also known as error compensation, is an immensely popular convergence stabilization mechanism in the context of distributed training of supervised machine learning models enhanced by the use of contractive communication compression mechanisms, such as Top-k. First proposed by Seide et al (2014) as a heuristic, EF resisted any theoretical understanding until recently [Stich et al., 2018, Alistarh et al., 2018]. However, all existing analyses either i) apply to the single node setting only, ii) rely on very strong and often unreasonable assumptions, such as global boundedness of the gradients, or iterate-dependent assumptions that cannot be checked a-priori and may not hold in practice, or iii) circumvent these issues via the introduction of additional unbiased compressors, which increase the communication cost. In this work we fix all these deficiencies by proposing and analyzing a new EF mechanism, which we call EF21, which consistently and substantially outperforms EF in practice. Our theoretical analysis relies on standard assumptions only, works in the distributed heterogeneous data setting, and leads to better and more meaningful rates. In particular, we prove that EF21 enjoys a fast O(1/T) convergence rate for smooth nonconvex problems, beating the previous bound of O(1/T^{2/3}), which was shown under a bounded gradients assumption. We further improve this to a fast linear rate for PL functions, which is the first linear convergence result for an EF-type method not relying on unbiased compressors. Since EF has a large number of applications where it reigns supreme, we believe that our 2021 variant, EF21, can have a large impact on the practice of communication efficient distributed learning.
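A minimal sketch of the EF21 update may help fix ideas: each node keeps a gradient estimate, sends only a Top-k compressed correction (the compressed difference between its fresh local gradient and its current estimate), and the model moves along the average of the estimates. The toy quadratic objectives, the step size, and the names `top_k` and `ef21` below are assumptions chosen for illustration; this is a simplified single-process simulation, not the authors' implementation.

```python
def top_k(v, k):
    """Contractive Top-k compressor: keep the k largest-magnitude entries."""
    keep = set(sorted(range(len(v)), key=lambda i: abs(v[i]), reverse=True)[:k])
    return [v[i] if i in keep else 0.0 for i in range(len(v))]


def ef21(grads, x0, step, k, iters):
    """Simulate EF21 with n nodes; grads[i](x) returns the gradient of f_i at x."""
    n = len(grads)
    x = list(x0)
    # Each node initialises its estimate with a compressed local gradient.
    g_local = [top_k(grad(x), k) for grad in grads]
    for _ in range(iters):
        # Server: average the node estimates and take a gradient-type step.
        g = [sum(col) / n for col in zip(*g_local)]
        x = [xj - step * gj for xj, gj in zip(x, g)]
        # Nodes: compress the gap between the fresh gradient and the estimate,
        # and add the compressed correction to the estimate.
        for i, grad in enumerate(grads):
            diff = [a - b for a, b in zip(grad(x), g_local[i])]
            c = top_k(diff, k)
            g_local[i] = [a + b for a, b in zip(g_local[i], c)]
    return x


# Toy heterogeneous problem (assumed): f_i(x) = 0.5 * ||x - b_i||^2,
# so the minimiser of the average is the mean of the b_i, here [2, 1, 1].
targets = [[1.0, 2.0, 3.0], [3.0, 0.0, -1.0]]
grads = [lambda x, b=b: [xj - bj for xj, bj in zip(x, b)] for b in targets]
x_final = ef21(grads, [0.0, 0.0, 0.0], step=0.05, k=1, iters=1000)
print(x_final)
```

Only one of three coordinates is communicated per node per round, yet the node estimates track the true local gradients increasingly well as the iterates settle, which is the intuition behind the method working with biased compressors.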

June 8, 2021

New Paper

New paper out: "Lower Bounds and Optimal Algorithms for Smooth and Strongly Convex Decentralized Optimization Over Time-Varying Networks" - joint work with Dmitry Kovalev, Elnur Gasanov and Alexander Gasnikov.

Abstract: We consider the task of minimizing the sum of smooth and strongly convex functions stored in a decentralized manner across the nodes of a communication network whose links are allowed to change in time. We solve two fundamental problems for this task. First, we establish the first lower bounds on the number of decentralized communication rounds and the number of local computations required to find an ϵ-accurate solution. Second, we design two optimal algorithms that attain these lower bounds: (i) a variant of the recently proposed algorithm ADOM (Kovalev et al., 2021) enhanced via a multi-consensus subroutine, which is optimal in the case when access to the dual gradients is assumed, and (ii) a novel algorithm, called ADOM+, which is optimal in the case when access to the primal gradients is assumed. We corroborate the theoretical efficiency of these algorithms by performing an experimental comparison with existing state-of-the-art methods.

June 6, 2021

New Paper

New paper out: "Smoothness-Aware Quantization Techniques" - joint work with Bokun Wang and Mher Safaryan.

Abstract: Distributed machine learning has become an indispensable tool for training large supervised machine learning models. To address the high communication costs of distributed training, which is further exacerbated by the fact that modern highly performing models are typically overparameterized, a large body of work has been devoted in recent years to the design of various compression strategies, such as sparsification and quantization, and optimization algorithms capable of using them. Recently, Safaryan et al (2021) pioneered a dramatically different compression design approach: they first use the local training data to form local "smoothness matrices", and then propose to design a compressor capable of exploiting the smoothness information contained therein. While this novel approach leads to substantial savings in communication, it is limited to sparsification as it crucially depends on the linearity of the compression operator. In this work, we resolve this problem by extending their smoothness-aware compression strategy to arbitrary unbiased compression operators, which also includes sparsification. Specializing our results to quantization, we observe significant savings in communication complexity compared to standard quantization. In particular, we show theoretically that block quantization with n blocks outperforms single block quantization, leading to a reduction in communication complexity by an O(n) factor, where n is the number of nodes in the distributed system. Finally, we provide extensive numerical evidence that our smoothness-aware quantization strategies outperform existing quantization schemes as well as the aforementioned smoothness-aware sparsification strategies with respect to all relevant success measures: the number of iterations, the total amount of bits communicated, and wall-clock time.

June 6, 2021

New Paper

New paper out: "Complexity Analysis of Stein Variational Gradient Descent under Talagrand's Inequality T1" - joint work with Adil Salim and Lukang Sun.

Abstract: We study the complexity of Stein Variational Gradient Descent (SVGD), which is an algorithm to sample from π(x)∝exp(−F(x)) where F is smooth and nonconvex. We provide a clean complexity bound for SVGD in the population limit in terms of the Stein Fisher Information (or squared Kernelized Stein Discrepancy), as a function of the dimension of the problem d and the desired accuracy ε. Unlike existing work, we do not make any assumption on the trajectory of the algorithm. Instead, our key assumption is that the target distribution satisfies Talagrand's inequality T1.

June 6, 2021

New Paper

New paper out: "MURANA: A Generic Framework for Stochastic Variance-Reduced Optimization" - joint work with Laurent Condat.

Abstract: We propose a generic variance-reduced algorithm, which we call MUltiple RANdomized Algorithm (MURANA), for minimizing a sum of several smooth functions plus a regularizer, in a sequential or distributed manner. Our method is formulated with general stochastic operators, which allow us to model various strategies for reducing the computational complexity. For example, MURANA supports sparse activation of the gradients, and also reduction of the communication load via compression of the update vectors. This versatility allows MURANA to cover many existing randomization mechanisms within a unified framework. However, MURANA also encodes new methods as special cases. We highlight one of them, which we call ELVIRA, and show that it improves upon Loopless SVRG.

June 5, 2021

New Paper

New paper out: "FedNL: Making Newton-Type Methods Applicable to Federated Learning" - joint work with Mher Safaryan, Rustem Islamov and Xun Qian.

Abstract: Inspired by recent work of Islamov et al (2021), we propose a family of Federated Newton Learn (FedNL) methods, which we believe is a marked step in the direction of making second-order methods applicable to FL. In contrast to the aforementioned work, FedNL employs a different Hessian learning technique which i) enhances privacy as it does not rely on the training data to be revealed to the coordinating server, ii) makes it applicable beyond generalized linear models, and iii) provably works with general contractive compression operators for compressing the local Hessians, such as Top-K or Rank-R, which are vastly superior in practice. Notably, we do not need to rely on error feedback for our methods to work with contractive compressors. Moreover, we develop FedNL-PP, FedNL-CR and FedNL-LS, which are variants of FedNL that support partial participation, and globalization via cubic regularization and line search, respectively, and FedNL-BC, which is a variant that can further benefit from bidirectional compression of gradients and models, i.e., smart uplink gradient and smart downlink model compression. We prove local convergence rates that are independent of the condition number, the number of training data points, and compression variance. Our communication efficient Hessian learning technique provably learns the Hessian at the optimum. Finally, we perform a variety of numerical experiments that show that our FedNL methods have state-of-the-art communication complexity when compared to key baselines.
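The Top-K operator mentioned in the abstract can be illustrated in a few lines. This is a minimal sketch of the compressor only, not of FedNL's Hessian learning update; the function name `top_k` and the NumPy-based layout are assumptions made for illustration. It keeps the k largest-magnitude entries of a matrix and zeroes the rest, which is contractive in the sense that the squared error is at most (1 - k/d) times the squared norm of the input, where d is the number of entries.

```python
import numpy as np

def top_k(matrix, k):
    """Keep the k largest-magnitude entries of `matrix`, zero out the rest.

    Illustrative sketch of a Top-K contractive compressor (hypothetical helper,
    not taken from the FedNL code).
    """
    matrix = np.asarray(matrix, dtype=float)
    flat = matrix.ravel()
    if k <= 0:
        return np.zeros_like(matrix)
    if k >= flat.size:
        return matrix.copy()
    # Indices of the k entries with the largest absolute value.
    keep = np.argpartition(np.abs(flat), -k)[-k:]
    out = np.zeros_like(flat)
    out[keep] = flat[keep]
    return out.reshape(matrix.shape)
```

Only the k retained values and their positions would need to be communicated, which is where the savings for a d x d local Hessian come from.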

June 5, 2021

Finally, the NeurIPS Month of Deadlines is Over

I've been silent here for a while due to a stream of NeurIPS deadlines (abstract, paper, supplementary material). My fantastic team and I can rest a bit now!

May 10, 2021

Papers Accepted to ICML 2021

We've had several papers accepted to the International Conference on Machine Learning (ICML 2021), which will be run virtually during July 18-24, 2021. Here they are:

1) "MARINA: Faster Non-convex Distributed Learning with Compression" [arXiv] [ICML] - joint work with Eduard Gorbunov, Konstantin Burlachenko and Zhize Li.

Abstract: We develop and analyze MARINA: a new communication efficient method for non-convex distributed learning over heterogeneous datasets. MARINA employs a novel communication compression strategy based on the compression of gradient differences which is reminiscent of but different from the strategy employed in the DIANA method of Mishchenko et al (2019). Unlike virtually all competing distributed first-order methods, including DIANA, ours is based on a carefully designed biased gradient estimator, which is the key to its superior theoretical and practical performance. To the best of our knowledge, the communication complexity bounds we prove for MARINA are strictly superior to those of all previous first order methods. Further, we develop and analyze two variants of MARINA: VR-MARINA and PP-MARINA. The first method is designed for the case when the local loss functions owned by clients are either of a finite sum or of an expectation form, and the second method allows for partial participation of clients -- a feature important in federated learning. All our methods are superior to previous state-of-the-art methods in terms of the oracle/communication complexity. Finally, we provide convergence analysis of all methods for problems satisfying the Polyak-Lojasiewicz condition.

More material:

- Short 5 min YouTube talk by Konstantin

- Long 70 min YouTube talk by Eduard delivered at the FLOW seminar

- poster

2) "PAGE: A Simple and Optimal Probabilistic Gradient Estimator for Nonconvex Optimization" [arXiv] [ICML] - joint work with Zhize Li, Hongyan Bao, and Xiangliang Zhang.

Abstract: In this paper, we propose a novel stochastic gradient estimator---ProbAbilistic Gradient Estimator (PAGE)---for nonconvex optimization. PAGE is easy to implement as it is designed via a small adjustment to vanilla SGD: in each iteration, PAGE uses the vanilla minibatch SGD update with probability p or reuses the previous gradient with a small adjustment, at a much lower computational cost, with probability 1−p. We give a simple formula for the optimal choice of p. We prove tight lower bounds for nonconvex problems, which are of independent interest. Moreover, we prove matching upper bounds both in the finite-sum and online regimes, which establish that PAGE is an optimal method. Besides, we show that for nonconvex functions satisfying the Polyak-Łojasiewicz (PL) condition, PAGE can automatically switch to a faster linear convergence rate. Finally, we conduct several deep learning experiments (e.g., LeNet, VGG, ResNet) on real datasets in PyTorch, and the results demonstrate that PAGE not only converges much faster than SGD in training but also achieves higher test accuracy, validating our theoretical results and confirming the practical superiority of PAGE.
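The update rule described in the abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the reference implementation: the function name `page`, the callback `grad_i(i, x)` returning the gradient of the i-th component function, and the batch-size names `b` and `b_small` are all hypothetical. With probability `p` the estimator is refreshed with a minibatch gradient; otherwise the previous estimator is reused and corrected by a cheaper minibatch of gradient differences.

```python
import numpy as np

def page(grad_i, n, x0, lr, p, b, b_small, steps, seed=0):
    """Sketch of a PAGE-style loop for a finite sum of n component functions.

    grad_i(i, x) -> gradient of the i-th component at x (assumed callback).
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)
    # Initial estimator: plain minibatch gradient.
    idx = rng.choice(n, size=b, replace=False)
    g = np.mean([grad_i(i, x) for i in idx], axis=0)
    for _ in range(steps):
        x_new = x - lr * g
        if rng.random() < p:
            # With probability p: fresh minibatch gradient at the new point.
            idx = rng.choice(n, size=b, replace=False)
            g = np.mean([grad_i(i, x_new) for i in idx], axis=0)
        else:
            # With probability 1 - p: reuse the previous estimator, adjusted by
            # gradient differences on a smaller minibatch.
            idx = rng.choice(n, size=b_small, replace=False)
            g = g + np.mean([grad_i(i, x_new) - grad_i(i, x) for i in idx], axis=0)
        x = x_new
    return x
```

The value of `p` is left as a free parameter here; the paper gives its own formula for choosing it.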

3) "Distributed Second Order Methods with Fast Rates and Compressed Communication" [arXiv] [ICML] - joint work with Rustem Islamov and Xun Qian.

Abstract: We develop several new communication-efficient second-order methods for distributed optimization. Our first method, NEWTON-STAR, is a variant of Newton's method from which it inherits its fast local quadratic rate. However, unlike Newton's method, NEWTON-STAR enjoys the same per iteration communication cost as gradient descent. While this method is impractical as it relies on the use of certain unknown parameters characterizing the Hessian of the objective function at the optimum, it serves as the starting point which enables us to design practical variants thereof with strong theoretical guarantees. In particular, we design a stochastic sparsification strategy for learning the unknown parameters in an iterative fashion in a communication efficient manner. Applying this strategy to NEWTON-STAR leads to our next method, NEWTON-LEARN, for which we prove local linear and superlinear rates independent of the condition number. When applicable, this method can have dramatically superior convergence behavior when compared to state-of-the-art methods. Finally, we develop a globalization strategy using cubic regularization which leads to our next method, CUBIC-NEWTON-LEARN, for which we prove global sublinear and linear convergence rates, and a fast superlinear rate. Our results are supported with experimental results on real datasets, and show several orders of magnitude improvement on baseline and state-of-the-art methods in terms of communication complexity.

More material:

- Short 5 min YouTube talk by Rustem

- Long 80 min YouTube talk by myself delivered at the FLOW seminar

- my FLOW talk slides

- poster

4) "Stochastic Sign Descent Methods: New Algorithms and Better Theory" [arXiv] [ICML] - joint work with Mher Safaryan.

Abstract: Various gradient compression schemes have been proposed to mitigate the communication cost in distributed training of large scale machine learning models. Sign-based methods, such as signSGD, have recently been gaining popularity because of their simple compression rule and connection to adaptive gradient methods, like ADAM. In this paper, we analyze sign-based methods for non-convex optimization in three key settings: (i) standard single node, (ii) parallel with shared data and (iii) distributed with partitioned data. For single machine case, we generalize the previous analysis of signSGD relying on intuitive bounds on success probabilities and allowing even biased estimators. Furthermore, we extend the analysis to parallel setting within a parameter server framework, where exponentially fast noise reduction is guaranteed with respect to number of nodes, maintaining 1-bit compression in both directions and using small mini-batch sizes. Next, we identify a fundamental issue with signSGD to converge in distributed environment. To resolve this issue, we propose a new sign-based method, Stochastic Sign Descent with Momentum (SSDM), which converges under standard bounded variance assumption with the optimal asymptotic rate. We validate several aspects of our theoretical findings with numerical experiments.
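For the parallel, parameter-server setting with 1-bit compression in both directions, one common scheme consistent with that description is sign aggregation by majority vote. The sketch below shows only that baseline and does not reproduce SSDM; the function name `majority_vote_step` and its arguments are assumptions for illustration. Each worker sends the sign of its stochastic gradient, and the server broadcasts the sign of the summed votes.

```python
import numpy as np

def majority_vote_step(x, worker_grads, lr):
    """One sign-based step with majority-vote aggregation (illustrative sketch).

    worker_grads: list of stochastic gradients, one per worker, same shape as x.
    """
    # Uplink: each worker transmits only the sign of each coordinate.
    signs = np.sign(np.stack(worker_grads))
    # Downlink: the server transmits the sign of the coordinate-wise vote total.
    # np.sign returns 0 on ties, which leaves that coordinate unchanged.
    vote = np.sign(signs.sum(axis=0))
    return x - lr * vote
```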

5) "ADOM: Accelerated Decentralized Optimization Method for Time-Varying Networks" [arXiv] [ICML] - joint work with Dmitry Kovalev, Egor Shulgin, Alexander Rogozin and Alexander Gasnikov.

Abstract: We propose ADOM - an accelerated method for smooth and strongly convex decentralized optimization over time-varying networks. ADOM uses a dual oracle, i.e., we assume access to the gradient of the Fenchel conjugate of the individual loss functions. Up to a constant factor, which depends on the network structure only, its communication complexity is the same as that of accelerated Nesterov gradient method (Nesterov, 2003). To the best of our knowledge, only the algorithm of Rogozin et al. (2019) has a convergence rate with similar properties. However, their algorithm converges under the very restrictive assumption that the number of network changes can not be greater than a tiny percentage of the number of iterations. This assumption is hard to satisfy in practice, as the network topology changes usually can not be controlled. In contrast, ADOM merely requires the network to stay connected throughout time.

April 29, 2021

Paper Accepted to IEEE Transactions on Information Theory

Our paper Revisiting randomized gossip algorithms: general framework, convergence rates and novel block and accelerated protocols, joint work with Nicolas Loizou, was accepted to IEEE Transactions on Information Theory.

April 28, 2021

KAUST Conference on Artificial Intelligence: 17 Short (up to 5 min) Talks by Members of my Team!

Today and tomorrow I am attending the KAUST Conference on Artificial Intelligence. Anyone can attend for free by watching the LIVE Zoom webinar stream. Today I gave a short 20 min talk entitled "Recent Advances in Optimization for Machine Learning". Here are my slides:

I will deliver another 20 min talk tomorrow, entitled "On Solving a Key Challenge in Federated Learning: Local Steps, Compression and Personalization". Here are the slides:

More importantly, 17 members (research scientists, postdocs, PhD students, MS students and interns) of the "Optimization and Machine Learning Lab" that I lead at KAUST have prepared short videos on selected recent papers they co-authored. This includes 9 papers from 2021, 7 papers from 2020 and 1 paper from 2019. Please check out their video talks! Here they are:

A talk by Konstantin Burlachenko (paper):

A talk by Laurent Condat (paper):

A talk by Eduard Gorbunov (paper):

A talk by Filip Hanzely (paper):

A talk by Slavomir Hanzely:

A talk by Samuel Horvath:

A talk by Rustem Islamov:

A talk by Ahmed Khaled:

A talk by Dmitry Kovalev:

A talk by Zhize Li:

A talk by Grigory Malinovsky:

A talk by Konstantin Mishchenko:

A talk by Xun Qian:

A talk by Mher Safaryan:

A talk by Adil Salim:

A talk by Egor Shulgin:

A talk by Bokun Wang:

April 21, 2021

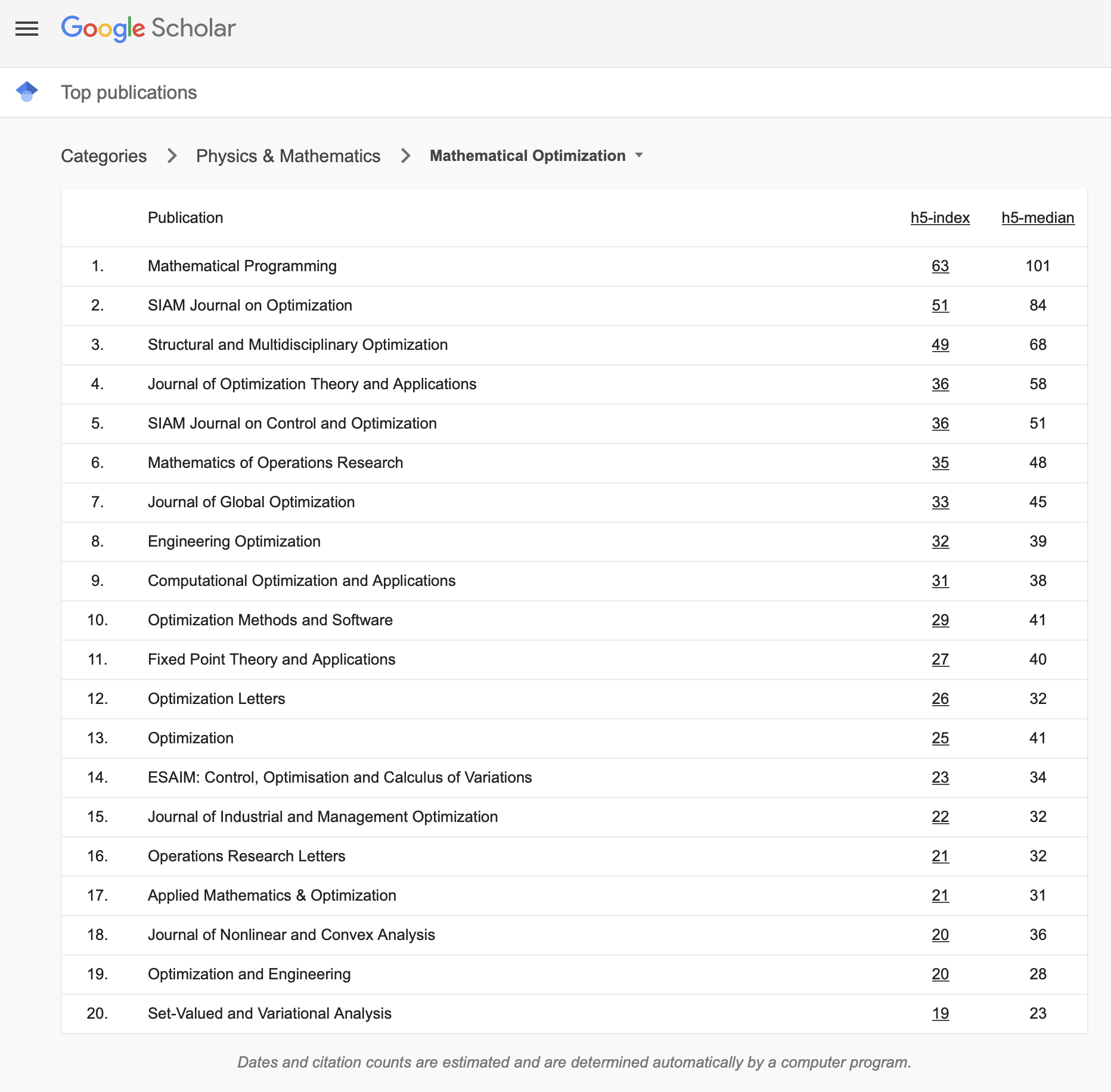

Area Editor for Journal of Optimization Theory and Applications

I have just become an Area Editor for Journal of Optimization Theory and Applications (JOTA), representing the area "Optimization and Machine Learning". Consider sending your best optimization for machine learning papers to JOTA! We aim to provide fast and high quality reviews.

Established in 1967, JOTA is one of the oldest optimization journals. For example, Mathematical Programming was established in 1972, SIAM J on Control and Optimization in 1976, and SIAM J on Optimization in 1991.

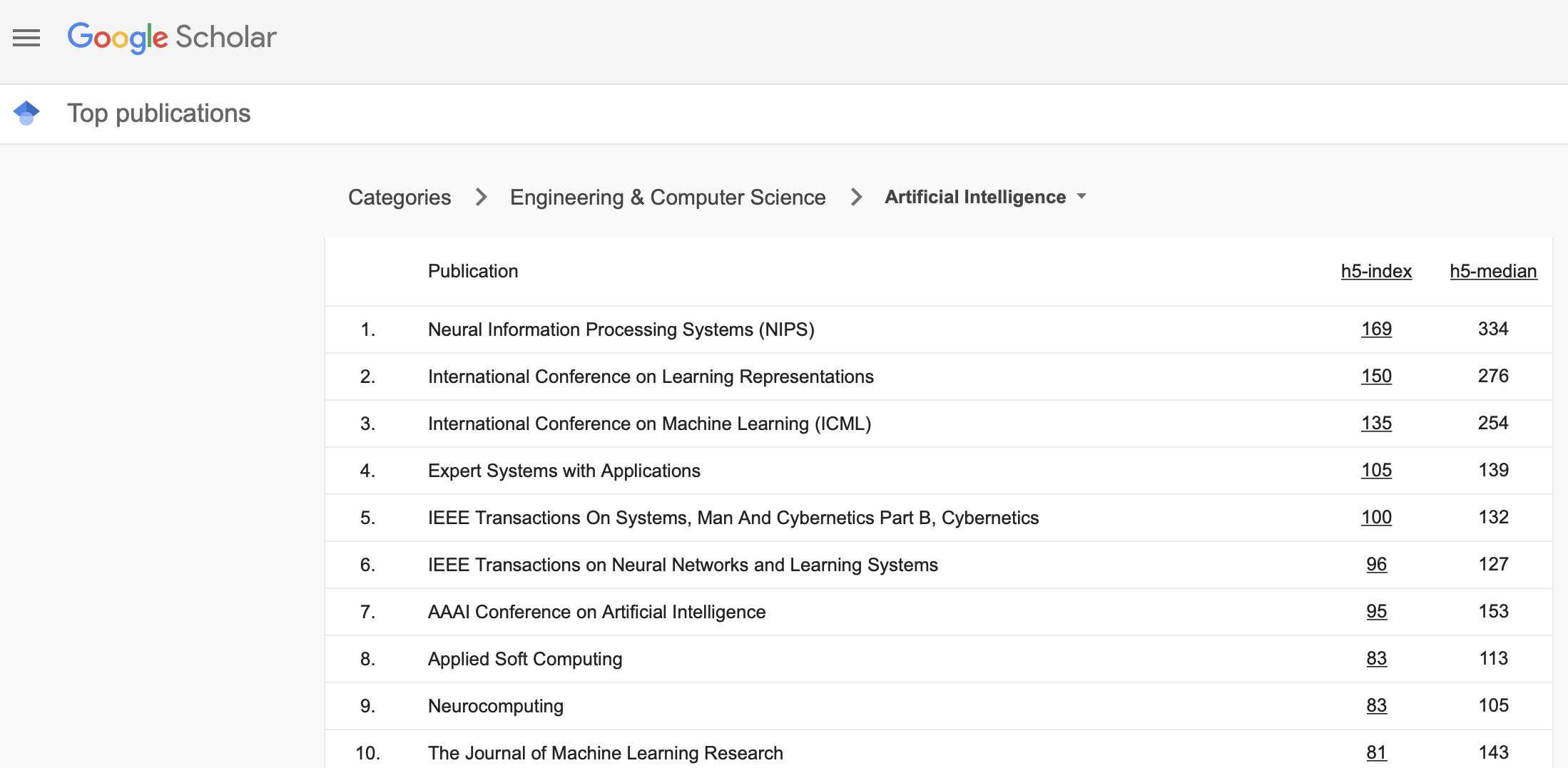

According to Google Scholar Metrics, JOTA is one of the top optimization journals:

April 22, 2021

Talk at AMCS/STAT Graduate Seminar at KAUST

Today I gave a talk entitled "Distributed second order methods with fast rates and compressed communication" at the AMCS/STAT Graduate Seminar at KAUST. Here is the official KAUST blurb. I talked about the paper Distributed Second Order Methods with Fast Rates and Compressed Communication. This is joint work with my fantastic intern Rustem Islamov (KAUST and MIPT) and fantastic postdoc Xun Qian (KAUST).

April 19, 2021

New Paper

New paper out: "Random Reshuffling with Variance Reduction: New Analysis and Better Rates" - joint work with Grigory Malinovsky and Alibek Sailanbayev.

Abstract: Virtually all state-of-the-art methods for training supervised machine learning models are variants of SGD enhanced with a number of additional tricks, such as minibatching, momentum, and adaptive stepsizes. One of the tricks that works so well in practice that it is used as default in virtually all widely used machine learning software is random reshuffling (RR). However, the practical benefits of RR have until very recently been eluding attempts at being satisfactorily explained using theory. Motivated by recent development due to Mishchenko, Khaled and Richtárik (2020), in this work we provide the first analysis of SVRG under Random Reshuffling (RR-SVRG) for general finite-sum problems. First, we show that RR-SVRG converges linearly with the rate $O(\kappa^{3/2})$ in the strongly-convex case, and can be improved further to $O(\kappa)$ in the big data regime (when $n > O(\kappa)$), where $\kappa$ is the condition number. This improves upon the previous best rate $O(\kappa^2)$ known for a variance reduced RR method in the strongly-convex case due to Ying, Yuan and Sayed (2020). Second, we obtain the first sublinear rate for general convex problems. Third, we establish similar fast rates for Cyclic-SVRG and Shuffle-Once-SVRG. Finally, we develop and analyze a more general variance reduction scheme for RR, which allows for less frequent updates of the control variate. We corroborate our theoretical results with suitably chosen experiments on synthetic and real datasets.

April 14, 2021

Talk at FLOW

Today I am giving a talk entitled "Beyond Local and Gradient Methods for Federated Learning" at the Federated Learning One World Seminar (FLOW). After a brief motivation spent on bashing gradient and local methods, I will talk about the paper Distributed Second Order Methods with Fast Rates and Compressed Communication. This is joint work with my fantastic intern Rustem Islamov (KAUST and MIPT) and fantastic postdoc Xun Qian (KAUST).

The talk was recorded and is now available on YouTube:

April 13, 2021

Three Papers Presented at AISTATS 2021

We've had three papers accepted to The 24th International Conference on Artificial Intelligence and Statistics (AISTATS 2021). The conference will be held virtually over the next few days, during April 13-15, 2021. Here are the papers:

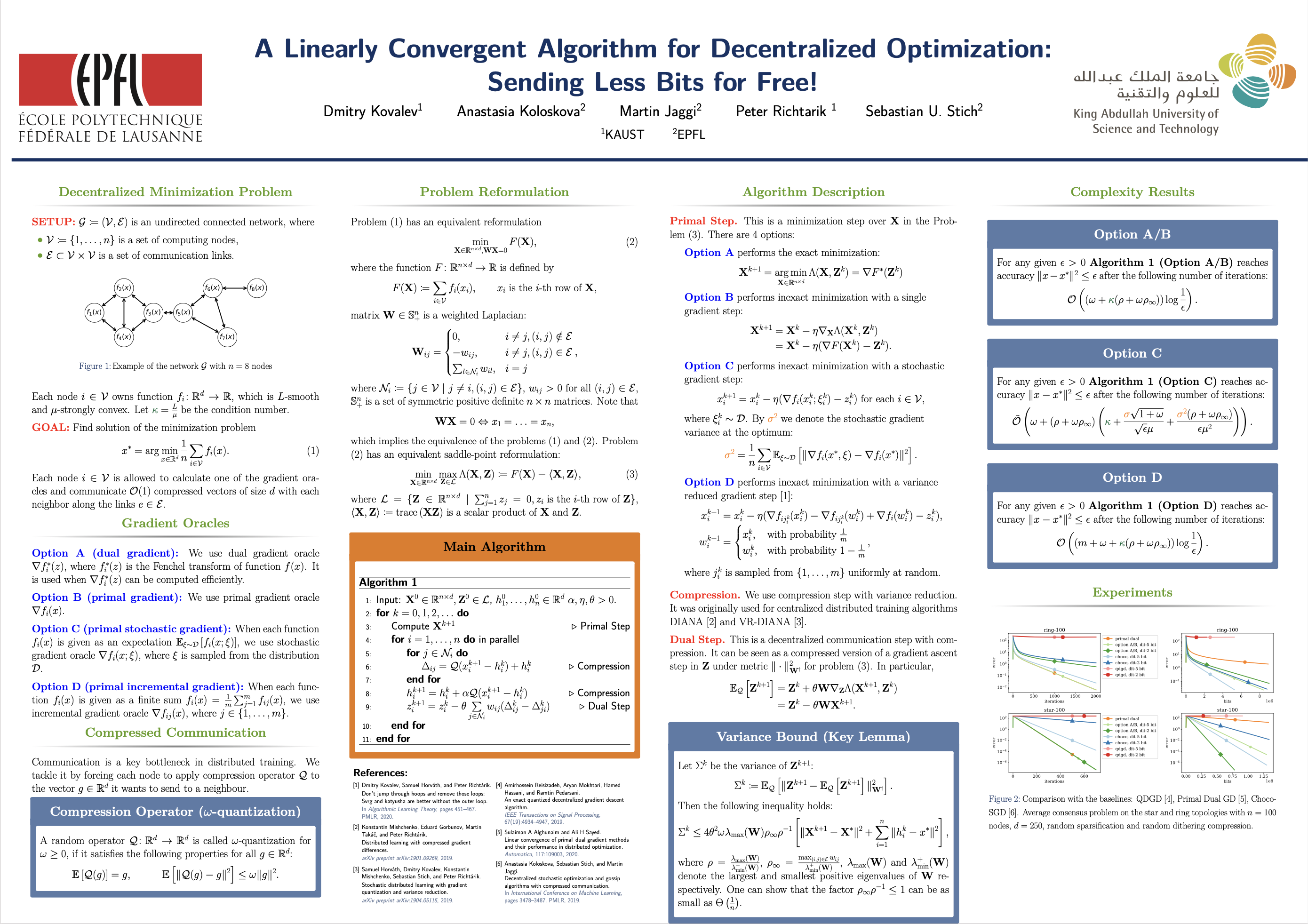

1. A linearly convergent algorithm for decentralized optimization: sending less bits for free!, joint work with Dmitry Kovalev, Anastasia Koloskova, Martin Jaggi, and Sebastian U. Stich.

Abstract: Decentralized optimization methods enable on-device training of machine learning models without a central coordinator. In many scenarios communication between devices is energy demanding and time consuming and forms the bottleneck of the entire system. We propose a new randomized first-order method which tackles the communication bottleneck by applying randomized compression operators to the communicated messages. By combining our scheme with a new variance reduction technique that progressively throughout the iterations reduces the adverse effect of the injected quantization noise, we obtain the first scheme that converges linearly on strongly convex decentralized problems while using compressed communication only. We prove that our method can solve the problems without any increase in the number of communications compared to the baseline which does not perform any communication compression while still allowing for a significant compression factor which depends on the conditioning of the problem and the topology of the network. Our key theoretical findings are supported by numerical experiments.

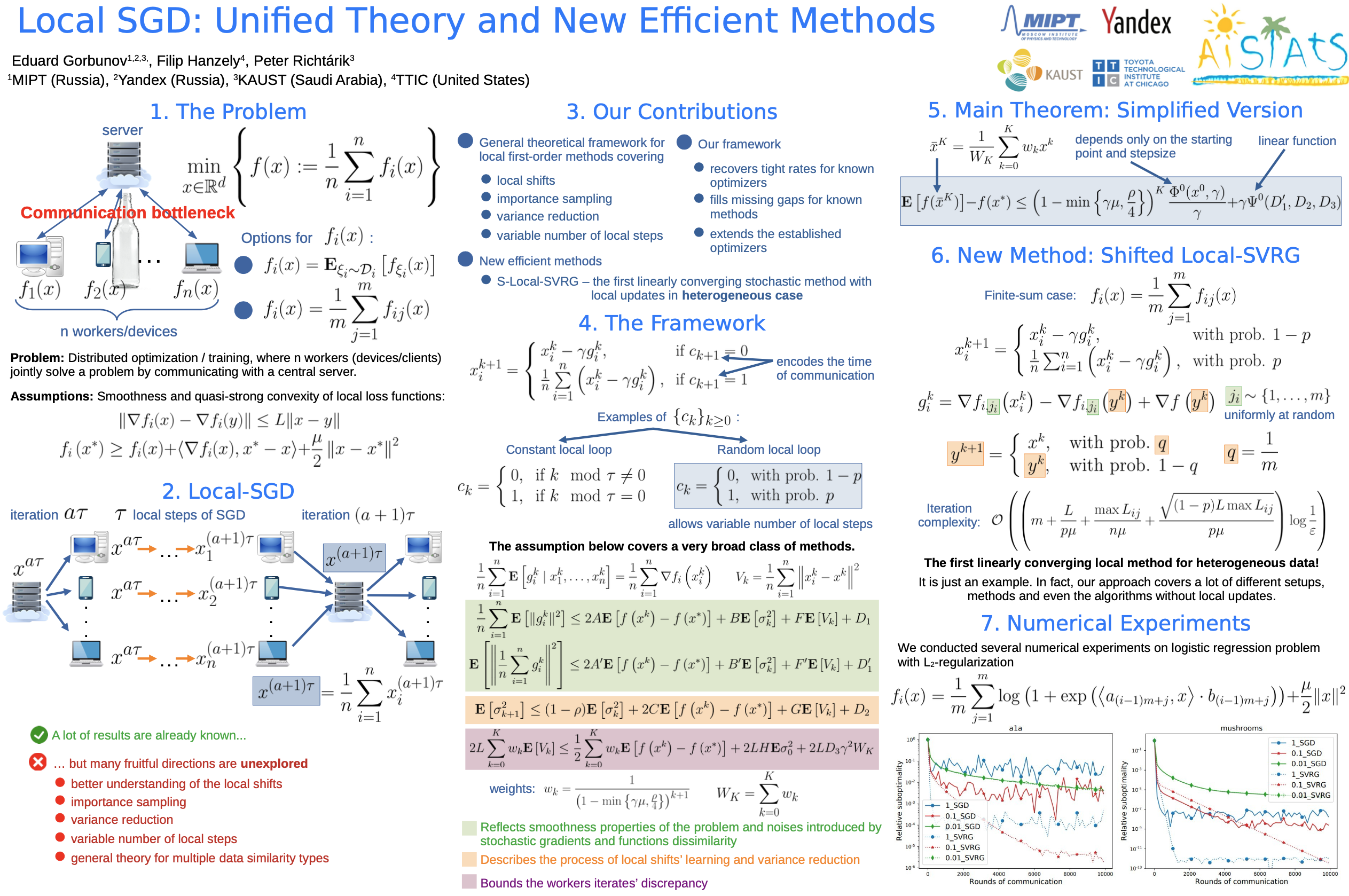

2. Local SGD: unified theory and new efficient methods, joint work with Eduard Gorbunov and Filip Hanzely.

Abstract: We present a unified framework for analyzing local SGD methods in the convex and strongly convex regimes for distributed/federated training of supervised machine learning models. We recover several known methods as a special case of our general framework, including Local-SGD/FedAvg, SCAFFOLD, and several variants of SGD not originally designed for federated learning. Our framework covers both the identical and heterogeneous data settings, supports both random and deterministic number of local steps, and can work with a wide array of local stochastic gradient estimators, including shifted estimators which are able to adjust the fixed points of local iterations for faster convergence. As an application of our framework, we develop multiple novel FL optimizers which are superior to existing methods. In particular, we develop the first linearly converging local SGD method which does not require any data homogeneity or other strong assumptions.

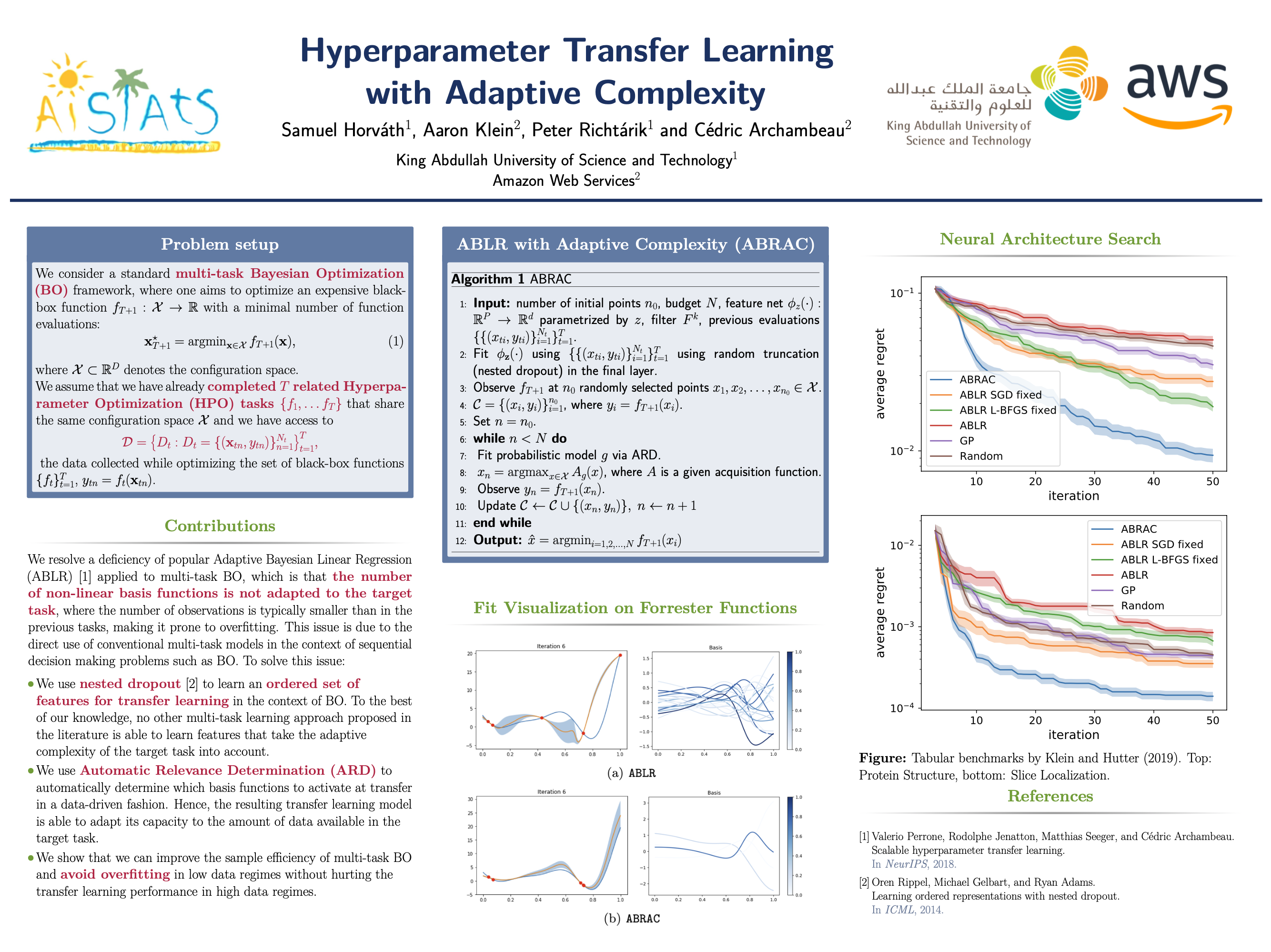

3. Hyperparameter transfer learning with adaptive complexity, joint work with Samuel Horváth, Aaron Klein, and Cedric Archambeau.

Abstract: Bayesian optimization (BO) is a data-efficient approach to automatically tune the hyperparameters of machine learning models. In practice, one frequently has to solve similar hyperparameter tuning problems sequentially. For example, one might have to tune a type of neural network learned across a series of different classification problems. Recent work on multi-task BO exploits knowledge gained from previous hyperparameter tuning tasks to speed up a new tuning task. However, previous approaches do not account for the fact that BO is a sequential decision making procedure. Hence, there is in general a mismatch between the number of evaluations collected in the current tuning task compared to the number of evaluations accumulated in all previously completed tasks. In this work, we enable multi-task BO to compensate for this mismatch, such that the transfer learning procedure is able to handle different data regimes in a principled way. We propose a new multi-task BO method that learns a set of ordered, non-linear basis functions of increasing complexity via nested drop-out and automatic relevance determination. Experiments on a variety of hyperparameter tuning problems show that our method improves the sample efficiency of recently published multi-task BO methods.

April 7, 2021

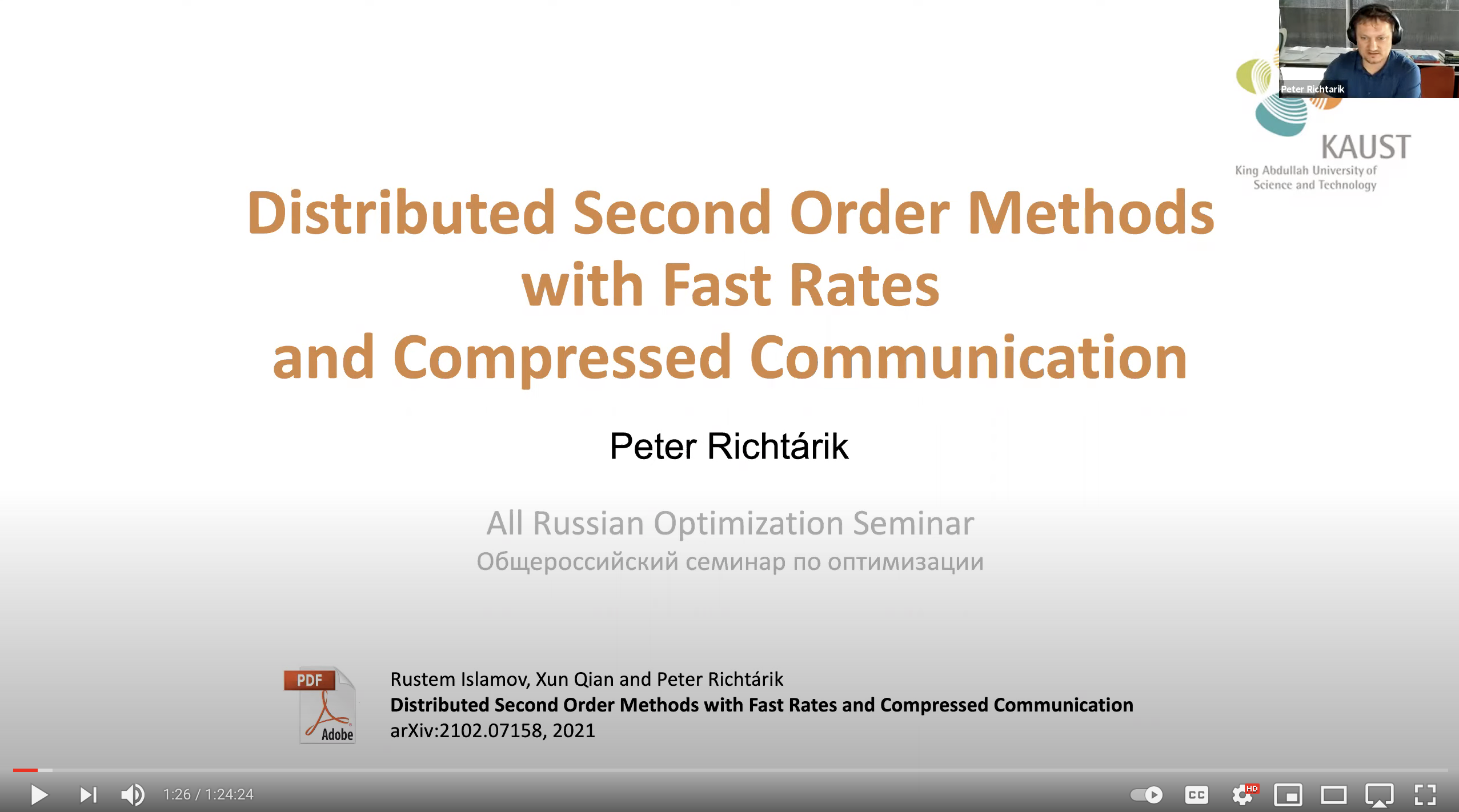

Talk at All Russian Seminar in Optimization

Today I am giving a talk at the All Russian Seminar in Optimization. I am talking about the paper Distributed Second Order Methods with Fast Rates and Compressed Communication, which is joint work with Rustem Islamov (KAUST and MIPT) and Xun Qian (KAUST).

The talk was recorded and uploaded to YouTube:

Here are the slides from my talk, and here is a poster that will soon be presented by Rustem Islamov at the NSF-TRIPODS Workshop on Communication Efficient Distributed Optimization.

March 24, 2021

Mishchenko and Gorbunov: ICLR 2021 Outstanding Reviewer Award

Congratulations to Konstantin Mishchenko and Eduard Gorbunov for receiving an Outstanding Reviewer Award from ICLR 2021! I wish the reviews we get for our papers were as good (i.e., insightful, expert and thorough) as the reviews Konstantin and Eduard are writing.

March 19, 2021

Area Chair for NeurIPS 2021

I will serve as an Area Chair for NeurIPS 2021, to be held during December 6-14, 2021 virtually ( = same location as last year ;-).

March 1, 2021

New PhD Student: Lukang Sun

Lukang Sun has joined my group as a PhD student. Welcome!!! Lukang has an MPhil degree in mathematics from Nanjing University, China (2020), and a BA in mathematics from Jilin University, China (2017). His thesis (written in Chinese) was on the topic of "Harmonic functions on metric measure spaces". In this work, Lukang proposed some novel methods using optimal transport theory to generalize some results from Riemannian manifolds to metric measure spaces. Lukang has held visiting/exchange/temporary positions at the Hong Kong University of Science and Technology, Georgia Institute of Technology, and the Chinese University of Hong Kong.

February 22, 2021

New Paper

New paper out: "An Optimal Algorithm for Strongly Convex Minimization under Affine Constraints" - joint work with Adil Salim, Laurent Condat and Dmitry Kovalev.

Abstract: Optimization problems under affine constraints appear in various areas of machine learning. We consider the task of minimizing a smooth strongly convex function $F(x)$ under the affine constraint $Kx=b$, with an oracle providing evaluations of the gradient of $F$ and matrix-vector multiplications by $K$ and its transpose. We provide lower bounds on the number of gradient computations and matrix-vector multiplications to achieve a given accuracy. Then we propose an accelerated primal--dual algorithm achieving these lower bounds. Our algorithm is the first optimal algorithm for this class of problems.

February 19, 2021

New Paper

New paper out: "AI-SARAH: Adaptive and Implicit Stochastic Recursive Gradient Methods" - joint work with Zheng Shi, Nicolas Loizou and Martin Takáč.

Abstract: We present an adaptive stochastic variance reduced method with an implicit approach for adaptivity. As a variant of SARAH, our method employs the stochastic recursive gradient yet adjusts step-size based on local geometry. We provide convergence guarantees for finite-sum minimization problems and show a faster convergence than SARAH can be achieved if local geometry permits. Furthermore, we propose a practical, fully adaptive variant, which does not require any knowledge of local geometry and any effort of tuning the hyper-parameters. This algorithm implicitly computes step-size and efficiently estimates local Lipschitz smoothness of stochastic functions. The numerical experiments demonstrate the algorithm's strong performance compared to its classical counterparts and other state-of-the-art first-order methods.

February 18, 2021

New Paper

New paper out: "ADOM: Accelerated Decentralized Optimization Method for Time-Varying Networks" - joint work with Dmitry Kovalev, Egor Shulgin, Alexander Rogozin, and Alexander Gasnikov.

Abstract: We propose ADOM - an accelerated method for smooth and strongly convex decentralized optimization over time-varying networks. ADOM uses a dual oracle, i.e., we assume access to the gradient of the Fenchel conjugate of the individual loss functions. Up to a constant factor, which depends on the network structure only, its communication complexity is the same as that of accelerated Nesterov gradient method (Nesterov, 2003). To the best of our knowledge, only the algorithm of Rogozin et al. (2019) has a convergence rate with similar properties. However, their algorithm converges under the very restrictive assumption that the number of network changes can not be greater than a tiny percentage of the number of iterations. This assumption is hard to satisfy in practice, as the network topology changes usually can not be controlled. In contrast, ADOM merely requires the network to stay connected throughout time.

February 16, 2021

New Paper

New paper out: "IntSGD: Floatless Compression of Stochastic Gradients" - joint work with Konstantin Mishchenko, Bokun Wang and Dmitry Kovalev.

Abstract: We propose a family of lossy integer compressions for Stochastic Gradient Descent (SGD) that do not communicate a single float. This is achieved by multiplying floating-point vectors with a number known to every device and then rounding to an integer number. Our theory shows that the iteration complexity of SGD does not change up to constant factors when the vectors are scaled properly. Moreover, this holds for both convex and non-convex functions, with and without overparameterization. In contrast to other compression-based algorithms, ours preserves the convergence rate of SGD even on non-smooth problems. Finally, we show that when the data is significantly heterogeneous, it may become increasingly hard to keep the integers bounded and propose an alternative algorithm, IntDIANA, to solve this type of problems.
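The scale-then-round idea from the abstract can be sketched as below. This is an illustration under assumptions, not the IntSGD algorithm itself: the helper names `int_compress` and `int_decompress` are hypothetical, the scaling factor `alpha` is simply taken as given (the paper prescribes how it should be chosen), and randomized rounding is used here as one way to keep the rounding unbiased.

```python
import numpy as np

def int_compress(v, alpha, rng):
    """Scale v by a factor alpha shared by all devices, then round to integers.

    Randomized rounding: round up with probability equal to the fractional
    part, so the rounded value equals alpha * v in expectation.
    """
    scaled = alpha * np.asarray(v, dtype=float)
    low = np.floor(scaled)
    round_up = rng.random(scaled.shape) < (scaled - low)
    return (low + round_up).astype(np.int64)

def int_decompress(q_sum, alpha, n_workers):
    """Recover an estimate of the average vector from the sum of integer messages."""
    return q_sum / (alpha * n_workers)
```

Because every worker uses the same `alpha`, the integer messages can be summed directly (for example by an integer all-reduce) and rescaled once at the end, so no float is ever communicated.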

February 16, 2021

Talk at MBZUAI

Today I gave a research seminar talk at MBZUAI. I spoke about randomized second order methods.

February 15, 2021

New Paper

New paper out: "MARINA: Faster Non-Convex Distributed Learning with Compression" - joint work with Eduard Gorbunov, Konstantin Burlachenko and Zhize Li.

Abstract: We develop and analyze MARINA: a new communication efficient method for non-convex distributed learning over heterogeneous datasets. MARINA employs a novel communication compression strategy based on the compression of gradient differences which is reminiscent of but different from the strategy employed in the DIANA method of Mishchenko et al (2019). Unlike virtually all competing distributed first-order methods, including DIANA, ours is based on a carefully designed biased gradient estimator, which is the key to its superior theoretical and practical performance. To the best of our knowledge, the communication complexity bounds we prove for MARINA are strictly superior to those of all previous first order methods. Further, we develop and analyze two variants of MARINA: VR-MARINA and PP-MARINA. The first method is designed for the case when the local loss functions owned by clients are either of a finite sum or of an expectation form, and the second method allows for partial participation of clients -- a feature important in federated learning. All our methods are superior to previous state-of-the-art methods in terms of the oracle/communication complexity. Finally, we provide convergence analysis of all methods for problems satisfying the Polyak-Lojasiewicz condition.

February 14, 2021

New Paper

New paper out: "Smoothness Matrices Beat Smoothness Constants: Better Communication Compression Techniques for Distributed Optimization" - joint work with Mher Safaryan and Filip Hanzely.

Abstract: Large scale distributed optimization has become the default tool for the training of supervised machine learning models with a large number of parameters and training data. Recent advancements in the field provide several mechanisms for speeding up the training, including compressed communication, variance reduction and acceleration. However, none of these methods is capable of exploiting the inherently rich data-dependent smoothness structure of the local losses beyond standard smoothness constants. In this paper, we argue that when training supervised models, smoothness matrices -- information-rich generalizations of the ubiquitous smoothness constants -- can and should be exploited for further dramatic gains, both in theory and practice. In order to further alleviate the communication burden inherent in distributed optimization, we propose a novel communication sparsification strategy that can take full advantage of the smoothness matrices associated with local losses. To showcase the power of this tool, we describe how our sparsification technique can be adapted to three distributed optimization algorithms -- DCGD, DIANA and ADIANA -- yielding significant savings in terms of communication complexity. The new methods always outperform the baselines, often dramatically so.

February 13, 2021

New Paper

New paper out: "Distributed Second Order Methods with Fast Rates and Compressed Communication" - joint work with Rustem Islamov and Xun Qian.

Abstract: We develop several new communication-efficient second-order methods for distributed optimization. Our first method, NEWTON-STAR, is a variant of Newton's method from which it inherits its fast local quadratic rate. However, unlike Newton's method, NEWTON-STAR enjoys the same per iteration communication cost as gradient descent. While this method is impractical as it relies on the use of certain unknown parameters characterizing the Hessian of the objective function at the optimum, it serves as the starting point which enables us to design practical variants thereof with strong theoretical guarantees. In particular, we design a stochastic sparsification strategy for learning the unknown parameters in an iterative fashion in a communication efficient manner. Applying this strategy to NEWTON-STAR leads to our next method, NEWTON-LEARN, for which we prove local linear and superlinear rates independent of the condition number. When applicable, this method can have dramatically superior convergence behavior when compared to state-of-the-art methods. Finally, we develop a globalization strategy using cubic regularization which leads to our next method, CUBIC-NEWTON-LEARN, for which we prove global sublinear and linear convergence rates, and a fast superlinear rate. Our results are supported with experimental results on real datasets, and show several orders of magnitude improvement on baseline and state-of-the-art methods in terms of communication complexity.

February 12, 2021

New Paper

New paper out: "Proximal and Federated Random Reshuffling" - joint work with Konstantin Mishchenko and Ahmed Khaled.

Abstract: Random Reshuffling (RR), also known as Stochastic Gradient Descent (SGD) without replacement, is a popular and theoretically grounded method for finite-sum minimization. We propose two new algorithms: Proximal and Federated Random Reshuffling (ProxRR and FedRR). The first algorithm, ProxRR, solves composite convex finite-sum minimization problems in which the objective is the sum of a (potentially non-smooth) convex regularizer and an average of n smooth objectives. We obtain the second algorithm, FedRR, as a special case of ProxRR applied to a reformulation of distributed problems with either homogeneous or heterogeneous data. We study the algorithms' convergence properties with constant and decreasing stepsizes, and show that they have considerable advantages over Proximal and Local SGD. In particular, our methods have superior complexities and ProxRR evaluates the proximal operator once per epoch only. When the proximal operator is expensive to compute, this small difference makes ProxRR up to n times faster than algorithms that evaluate the proximal operator in every iteration. We give examples of practical optimization tasks where the proximal operator is difficult to compute and ProxRR has a clear advantage. Finally, we corroborate our results with experiments on real data sets.
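The "one proximal step per epoch" structure can be sketched as follows. This is a minimal illustration under assumptions: the function names, the callback `grad_i(i, x)`, and the choice of an L1 regularizer (whose proximal operator is soft-thresholding) are all hypothetical, and the scaling of the proximal step by `lr * n` is an assumption of this sketch meant to account for the n gradient steps taken within the epoch.

```python
import numpy as np

def soft_threshold(x, tau):
    """Proximal operator of tau * ||x||_1."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def prox_rr(grad_i, n, x0, lr, lam, epochs, seed=0):
    """Sketch of ProxRR-style updates for (1/n) * sum_i f_i(x) + lam * ||x||_1.

    grad_i(i, x) -> gradient of the i-th smooth component at x (assumed callback).
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)
    for _ in range(epochs):
        # One pass over the data in a freshly sampled random order.
        for i in rng.permutation(n):
            x = x - lr * grad_i(i, x)
        # Single proximal step at the end of the epoch.
        x = soft_threshold(x, lr * n * lam)
    return x
```

Compared with applying `soft_threshold` after every gradient step, this loop calls the proximal operator n times less often, which is the source of the savings when that operator is expensive.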

February 10, 2021

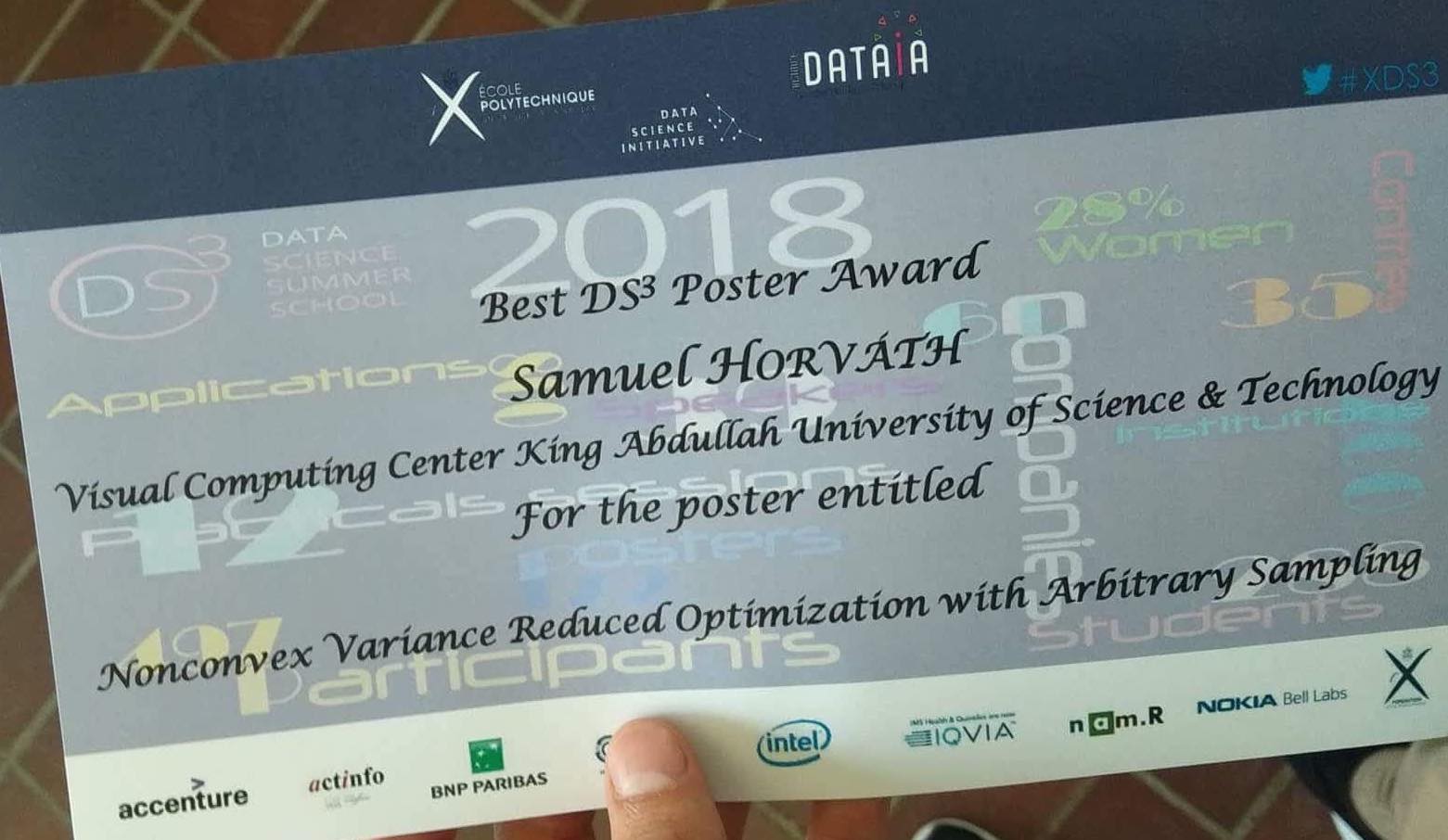

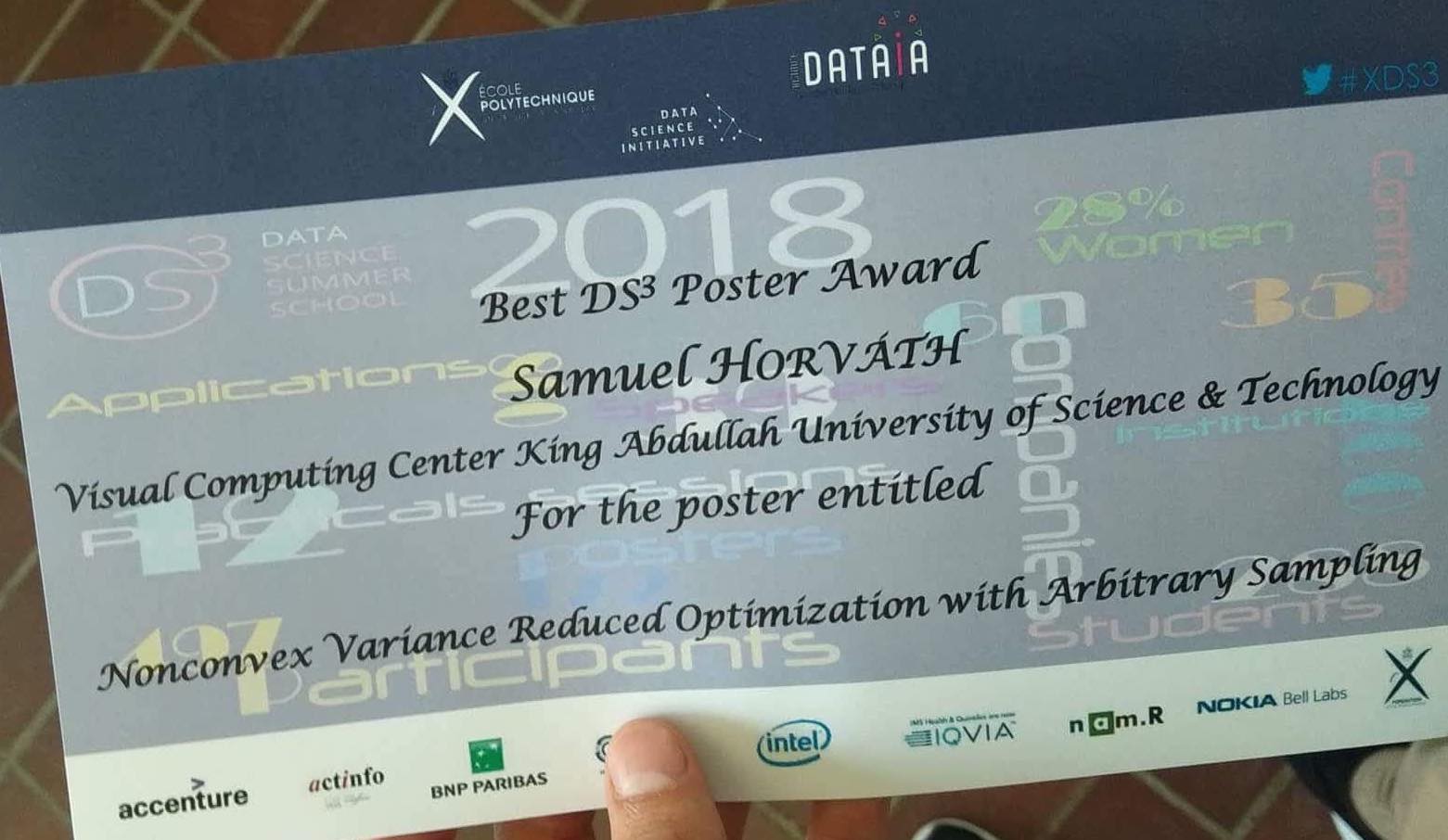

Best Paper Award @ NeurIPS SpicyFL 2020

Super happy about this surprise prize; and huge congratulations to my outstanding student and collaborator Samuel Horváth. The paper was recently accepted to ICLR 2021, check it out!

January 24, 2021

Spring 2021 Semester Starts at KAUST

The Spring semester starts at KAUST today. Every year its timing conflicts with the endgame before the ICML submission deadline, and this year is no different, except for Covid-19. I am teaching CS 332: Federated Learning on Sundays and Tuesdays. The first class is today.

January 23, 2021

Three Papers Accepted to AISTATS 2021

We've had three papers accepted to The 24th International Conference on Artificial Intelligence and Statistics (AISTATS 2021). The conference will be held virtually during April 13-15, 2021. Here are the papers:

1. A linearly convergent algorithm for decentralized optimization: sending less bits for free!, joint work with Dmitry Kovalev, Anastasia Koloskova, Martin Jaggi, and Sebastian U. Stich.

Abstract: Decentralized optimization methods enable on-device training of machine learning models without a central coordinator. In many scenarios communication between devices is energy demanding and time consuming and forms the bottleneck of the entire system. We propose a new randomized first-order method which tackles the communication bottleneck by applying randomized compression operators to the communicated messages. By combining our scheme with a new variance reduction technique that progressively throughout the iterations reduces the adverse effect of the injected quantization noise, we obtain the first scheme that converges linearly on strongly convex decentralized problems while using compressed communication only. We prove that our method can solve the problems without any increase in the number of communications compared to the baseline which does not perform any communication compression while still allowing for a significant compression factor which depends on the conditioning of the problem and the topology of the network. Our key theoretical findings are supported by numerical experiments.

2. Local SGD: unified theory and new efficient methods, joint work with Eduard Gorbunov and Filip Hanzely.

Abstract: We present a unified framework for analyzing local SGD methods in the convex and strongly convex regimes for distributed/federated training of supervised machine learning models. We recover several known methods as a special case of our general framework, including Local-SGD/FedAvg, SCAFFOLD, and several variants of SGD not originally designed for federated learning. Our framework covers both the identical and heterogeneous data settings, supports both random and deterministic number of local steps, and can work with a wide array of local stochastic gradient estimators, including shifted estimators which are able to adjust the fixed points of local iterations for faster convergence. As an application of our framework, we develop multiple novel FL optimizers which are superior to existing methods. In particular, we develop the first linearly converging local SGD method which does not require any data homogeneity or other strong assumptions.

3. Hyperparameter transfer learning with adaptive complexity, joint work with Samuel Horváth, Aaron Klein, and Cedric Archambeau.

Abstract: Bayesian optimization (BO) is a data-efficient approach to automatically tune the hyperparameters of machine learning models. In practice, one frequently has to solve similar hyperparameter tuning problems sequentially. For example, one might have to tune a type of neural network learned across a series of different classification problems. Recent work on multi-task BO exploits knowledge gained from previous hyperparameter tuning tasks to speed up a new tuning task. However, previous approaches do not account for the fact that BO is a sequential decision making procedure. Hence, there is in general a mismatch between the number of evaluations collected in the current tuning task compared to the number of evaluations accumulated in all previously completed tasks. In this work, we enable multi-task BO to compensate for this mismatch, such that the transfer learning procedure is able to handle different data regimes in a principled way. We propose a new multi-task BO method that learns a set of ordered, non-linear basis functions of increasing complexity via nested drop-out and automatic relevance determination. Experiments on a variety of hyperparameter tuning problems show that our method improves the sample efficiency of recently published multi-task BO methods.
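As a rough illustration of the local-update pattern behind the second paper above (Local-SGD/FedAvg), the sketch below runs a few local gradient steps on each worker and then averages the local models. It is a toy under stated assumptions, not the paper's framework: the workers hold hypothetical 1-D quadratics f_i(x) = 0.5 (x - a_i)^2, exact gradients stand in for stochastic ones, and all names and constants are made up.

```python
def local_gd(a, stepsize, local_steps, rounds, x0=0.0):
    """Toy local gradient descent with periodic averaging.

    Worker i holds f_i(x) = 0.5 * (x - a[i])**2 (heterogeneous data when
    the a[i] differ). Exact gradients are used for simplicity.
    """
    x = x0
    for _round in range(rounds):
        local_models = []
        for a_i in a:
            x_i = x                               # start from the shared model
            for _step in range(local_steps):
                x_i -= stepsize * (x_i - a_i)     # local gradient step
            local_models.append(x_i)
        x = sum(local_models) / len(local_models)  # communication: average
    return x


x_final = local_gd([1.0, 2.0, 6.0], stepsize=0.1, local_steps=5, rounds=200)
print(x_final)
```

In this particular toy all workers share the same curvature, so the averaged iterate converges to the exact minimizer, the mean of the a_i. That is a property of the example and should not be read as a general guarantee for heterogeneous data.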

January 22, 2021

Paper Accepted to Information and Inference: A Journal of the IMA

Our paper "Uncertainty Principle for Communication Compression in Distributed and Federated Learning and the Search for an Optimal Compressor", joint work with Mher Safaryan and Egor Shulgin, was accepted to Information and Inference: A Journal of the IMA.

Abstract: In order to mitigate the high communication cost in distributed and federated learning, various vector compression schemes, such as quantization, sparsification and dithering, have become very popular. In designing a compression method, one aims to communicate as few bits as possible, which minimizes the cost per communication round, while at the same time attempting to impart as little distortion (variance) to the communicated messages as possible, which minimizes the adverse effect of the compression on the overall number of communication rounds. However, intuitively, these two goals are fundamentally in conflict: the more compression we allow, the more distorted the messages become. We formalize this intuition and prove an uncertainty principle for randomized compression operators, thus quantifying this limitation mathematically, and effectively providing asymptotically tight lower bounds on what might be achievable with communication compression. Motivated by these developments, we call for the search for the optimal compression operator. In an attempt to take a first step in this direction, we consider an unbiased compression method inspired by the Kashin representation of vectors, which we call Kashin compression (KC). In contrast to all previously proposed compression mechanisms, KC enjoys a dimension independent variance bound for which we derive an explicit formula even in the regime when only a few bits need to be communicated per vector entry.

January 12, 2021

Paper Accepted to ICLR 2021

Our paper "A Better Alternative to Error Feedback for Communication-efficient Distributed Learning", joint work with Samuel Horváth, was accepted to The 9th International Conference on Learning Representations (ICLR 2021).

Abstract: Modern large-scale machine learning applications require stochastic optimization algorithms to be implemented on distributed compute systems. A key bottleneck of such systems is the communication overhead for exchanging information across the workers, such as stochastic gradients. Among the many techniques proposed to remedy this issue, one of the most successful is the framework of compressed communication with error feedback (EF). EF remains the only known technique that can deal with the error induced by contractive compressors which are not unbiased, such as Top-K. In this paper, we propose a new and theoretically and practically better alternative to EF for dealing with contractive compressors. In particular, we propose a construction which can transform any contractive compressor into an induced unbiased compressor. Following this transformation, existing methods able to work with unbiased compressors can be applied. We show that our approach leads to vast improvements over EF, including reduced memory requirements, better communication complexity guarantees and fewer assumptions. We further extend our results to federated learning with partial participation following an arbitrary distribution over the nodes, and demonstrate the benefits thereof. We perform several numerical experiments which validate our theoretical findings.
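The transformation from a contractive compressor to an unbiased one can be sketched in a few lines. The version below assumes the construction C(x) = C1(x) + C2(x - C1(x)), with Top-K as the contractive C1 and a scaled random-k sparsifier as the unbiased C2. The choice of C2, the function names, and the test vector are my own illustrative assumptions.

```python
import random


def top_k(x, k):
    """Contractive (biased) compressor: keep the k largest-magnitude entries."""
    keep = set(sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)[:k])
    return [x[i] if i in keep else 0.0 for i in range(len(x))]


def rand_k(x, k, rng):
    """Unbiased compressor: keep k random entries, scaled by d / k."""
    d = len(x)
    keep = set(rng.sample(range(d), k))
    return [x[i] * d / k if i in keep else 0.0 for i in range(d)]


def induced(x, k_top, k_rand, rng):
    """Assumed induced compressor: C1(x) + C2(x - C1(x))."""
    c1 = top_k(x, k_top)
    residual = [xi - ci for xi, ci in zip(x, c1)]
    c2 = rand_k(residual, k_rand, rng)   # unbiased estimate of what Top-K dropped
    return [u + v for u, v in zip(c1, c2)]


rng = random.Random(0)
x = [1.0, -5.0, 3.0, 0.5]
trials = 20000
mean = [0.0] * len(x)
for _ in range(trials):
    c = induced(x, 2, 1, rng)
    mean = [m + ci / trials for m, ci in zip(mean, c)]
print(mean)  # empirical average of the compressed vectors, close to x
```

Since rand_k is unbiased, the expectation of the induced output is C1(x) + (x - C1(x)) = x, which the empirical average above approximates.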

January 11, 2021

Call for Al-Khwarizmi Doctoral Fellowships (apply by Jan 22, 2021)

If you are from Europe and want to apply for a PhD position in my Optimization and Machine Learning group at KAUST, you may wish to apply for the European Science Foundation Al-Khwarizmi Doctoral Fellowship.

Here is the official blurb:

"The Al-Khwarizmi Graduate Fellowship scheme invites applications for doctoral fellowships, with the submission deadline of 22 January 2021, 17:00 CET. The King Abdullah University of Science and Technology (KAUST) in the Kingdom of Saudi Arabia with support from the European Science Foundation (ESF) launches a competitive doctoral fellowship scheme to welcome students from the European continent for a research journey to a top international university in the Middle East. The applications will be evaluated via an independent peer-review process managed by the ESF. The selected applicants will be offered generous stipends and free tuition for Ph.D. studies within one of KAUST academic programs. Strong applicants who were not awarded a Fellowship but passed KAUST admission requirements will be offered the possibility to join the University as regular Ph.D. students with the standard benefits that include the usual stipends and free tuition."

- Submission deadline = 22 January 2021 @ 17:00 CET

- Duration of the Fellowship = 3 years (extensions may be considered in duly justified cases)

- Annual living allowance/stipend = USD 38,000 (net)

- Approx USD 50,000 annual benefits = free tuition, free student housing on campus, relocation support, and medical and dental coverage

- Each Fellowship includes a supplementary grant of USD 6,000 at the Fellow’s disposal for research-related expenses such as conference attendance

- The applications must be submitted in two steps, with the formal documents and transcripts to be submitted to KAUST Admissions in Step 1, and the research proposal to be submitted to the ESF in Step 2. Both steps should be completed in parallel before the call deadline.

December 15, 2020

Vacation

I am on vacation until early January, 2021.

December 12, 2020

Paper Accepted to NSDI 2021

Our paper "Scaling Distributed Machine Learning with In-Network Aggregation", joint work with Amedeo Sapio, Marco Canini, Chen-Yu Ho, Jacob Nelson, Panos Kalnis, Changhoon Kim, Arvind Krishnamurthy, Masoud Moshref, and Dan R. K. Ports, was accepted to The 18th USENIX Symposium on Networked Systems Design and Implementation (NSDI '21 Fall).

Abstract: Training machine learning models in parallel is an increasingly important workload. We accelerate distributed parallel training by designing a communication primitive that uses a programmable switch dataplane to execute a key step of the training process. Our approach, SwitchML, reduces the volume of exchanged data by aggregating the model updates from multiple workers in the network. We co-design the switch processing with the end-host protocols and ML frameworks to provide an efficient solution that speeds up training by up to 5.5× for a number of real-world benchmark models.

December 8, 2020

Fall 2020 Semester at KAUST is Over

The Fall 2020 semester at KAUST is now over; I've had a lot of fun teaching my CS 331 class (Stochastic Gradient Descent Methods). At the very end I ran into some LaTeX issues after upgrading to Big Sur on Mac - should not have done that...

December 6, 2020

NeurIPS 2020 Started

The members of my group and I will be attending NeurIPS 2020 - the event is starting today. Marco Cuturi and I will co-chair the Optimization session (Track 21) on Wednesday. I am particularly looking forward to the workshops: OPT2020, PPML and SpicyFL.

November 24, 2020

New Paper

New paper out: "Error Compensated Loopless SVRG for Distributed Optimization" - joint work with Xun Qian, Hanze Dong, and Tong Zhang.

Abstract: A key bottleneck in distributed training of large scale machine learning models is the overhead related to communication of gradients. In order to reduce the communication cost, gradient compression (e.g., sparsification and quantization) and error compensation techniques are often used. In this paper, we propose and study a new efficient method in this space: error compensated loopless SVRG method (L-SVRG). Our method is capable of working with any contraction compressor (e.g., TopK compressor), and we perform analysis for strongly convex optimization problems in the composite case and smooth case. We prove linear convergence rates for both cases and show that in the smooth case the rate has a better dependence on the contraction factor associated with the compressor. Further, we show that in the smooth case, and under certain conditions, error compensated L-SVRG has the same convergence rate as the vanilla L-SVRG method. Numerical experiments are presented to illustrate the efficiency of our method.

November 24, 2020

New Paper

New paper out: "Error Compensated Proximal SGD and RDA" - joint work with Xun Qian, Hanze Dong, and Tong Zhang.